Subsections of

Subsections of Introduction

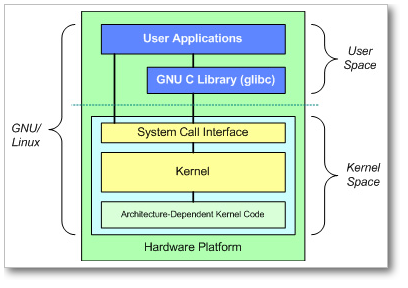

Linux Kernel Architecture

At the top is the user, or application, space. This is where the user applications are executed. Below the user space is the kernel space.

There is also the GNU C Library (glibc). This provides the system call interface that connects to the kernel and provides the mechanism to transition between the user-space application and the kernel. This is important because the kernel and user application occupy different protected address spaces. And while each user-space process occupies its own virtual address space, the kernel occupies a single address space.

The Linux kernel can be further divided into three gross levels.

- At the top is the system call interface, which implements the basic functions such as read and write.

- Below the system call interface is the kernel code, which can be more accurately defined as the architecture-independent kernel code. This code is common to all of the processor architectures supported by Linux.

- Below this is the architecture-dependent code, which forms what is more commonly called a BSP (Board Support Package). This code serves as the processor and platform-specific code for the given architecture.

The Linux kernel implements a number of important architectural attributes. At a high level, and at lower levels, the kernel is layered into a number of distinct subsystems.

Linux Namespaces

Namespaces are a feature of the Linux kernel that partitions kernel resources such that one set of processes sees one set of resources while another set of processes sees a different set of resources. The feature works by having the same name space for these resources in the various sets of processes, but those names referring to distinct resources. Examples of resource names that can exist in multiple spaces, so that the named resources are partitioned, are process IDs, hostnames, user IDs, file names, and some names associated with network access, and interprocess communication.

Namespaces are a fundamental aspect of containers on Linux.

| Namespace | Constant | Isolates |

|---|---|---|

| Cgroup | CLONE_NEWCGROUP | Cgroup root directory |

| IPC | CLONE_NEWIPC | System V IPC, POSIX message queues |

| Network | CLONE_NEWNET | Network devices, stacks, ports, etc. |

| Mount | CLONE_NEWNS | Mount points |

| PID | CLONE_NEWPID | Process IDs |

| User | CLONE_NEWUSER | User and group IDs |

| UTS | CLONE_NEWUTS | Hostname and NIS domain name |

The kernel assigns each process a symbolic link per namespace kind in /proc/<pid>/ns/. The inode number pointed to by this symlink is the same for each process in this namespace. This uniquely identifies each namespace by the inode number pointed to by one of its symlinks.

Reading the symlink via readlink returns a string containing the namespace kind name and the inode number of the namespace.

CGroups

cgroups (abbreviated from control groups) is a Linux kernel feature that limits, accounts for, and isolates the resource usage (CPU, memory, disk I/O, network, etc.) of a collection of processes.

Resource limiting

groups can be set to not exceed a configured memory limit

Prioritization

Some groups may get a larger share of CPU utilization or disk I/O throughput

Accounting

Measures a group’s resource usage, which may be used

Control

Freezing groups of processes, their checkpointing and restarting

You can read and explore more about cGroups in this post

Container from scratch

Using namespaces , we can start a process which will be completely isolated from other processes running in the system.

Create root File System

Create directory to store rootfs contents

$ mkdir -p /root/busybox/rootfs

$ CONTAINER_ROOT=/root/busybox/rootfs

$ cd ${CONTAINER_ROOT}Download busybox binary

$ wget https://busybox.net/downloads/binaries/1.28.1-defconfig-multiarch/busybox-x86_64Create needed directories and symlinks

$ mv busybox-x86_64 busybox

$ chmod 755 busybox

$ mkdir bin

$ mkdir proc

$ mkdir sys

$ mkdir tmp

$ for i in $(./busybox --list)

do

ln -s /busybox bin/$i

doneStart Container

Start a shell in new contianer

$ unshare --mount --uts --ipc --net --pid --fork --user --map-root-user chroot ${CONTAINER_ROOT} /bin/shMount essential kernel structures

$ mount -t proc none /proc

$ mount -t sysfs none /sys

$ mount -t tmpfs none /tmpConfigure networking

From Host system , create a veth pair and then map that to container

$ sudo ip link add vethlocal type veth peer name vethNS

$ sudo ip link set vethlocal up

$ sudo ip link set vethNS up

$ sudo ps -ef |grep '/bin/sh'

$ sudo ip link set vethNS netns <pid of /bin/sh>From container , execute ip link

What is Docker

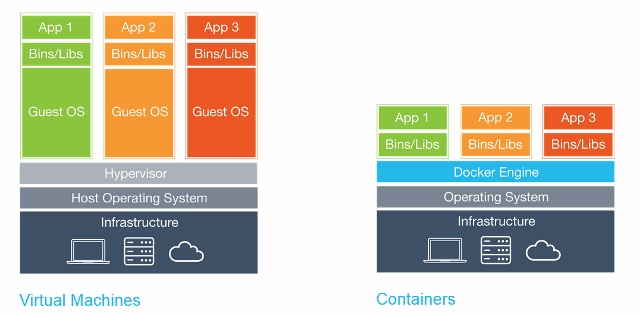

Docker is a tool designed to make it easier to create, deploy, and run applications by using containers. Containers allow a developer to package up an application with all of the parts it needs, such as libraries and other dependencies, and ship it all out as one package.

In a way, Docker is a bit like a virtual machine. But unlike a virtual machine, rather than creating a whole virtual operating system, Docker allows applications to use the same Linux kernel as the system that they’re running on and only requires applications be shipped with things not already running on the host computer. This gives a significant performance boost and reduces the size of the application.

Kubernetes

Pet vs Cattle.

In the pets service model, each pet server is given a loving names like zeus, ares, hades, poseidon, and athena. They are “unique, lovingly hand-raised, and cared for, and when they get sick, you nurse them back to health”. You scale these up by making them bigger, and when they are unavailable, everyone notices.

In the cattle service model, the servers are given identification numbers like web-01, web-02, web-03, web-04, and web-05, much the same way cattle are given numbers tagged to their ear. Each server is “almost identical to each other” and “when one gets sick, you replace it with another one”. You scale these by creating more of them, and when one is unavailable, no one notices.

Kubernetes is a portable, extensible open-source platform for managing containerized workloads and services, that facilitates both declarative configuration and automation. It has a large, rapidly growing ecosystem. Kubernetes services, support, and tools are widely available.

Google open-sourced the Kubernetes project in 2014. Kubernetes builds upon a decade and a half of experience that Google has with running production workloads at scale, combined with best-of-breed ideas and practices from the community

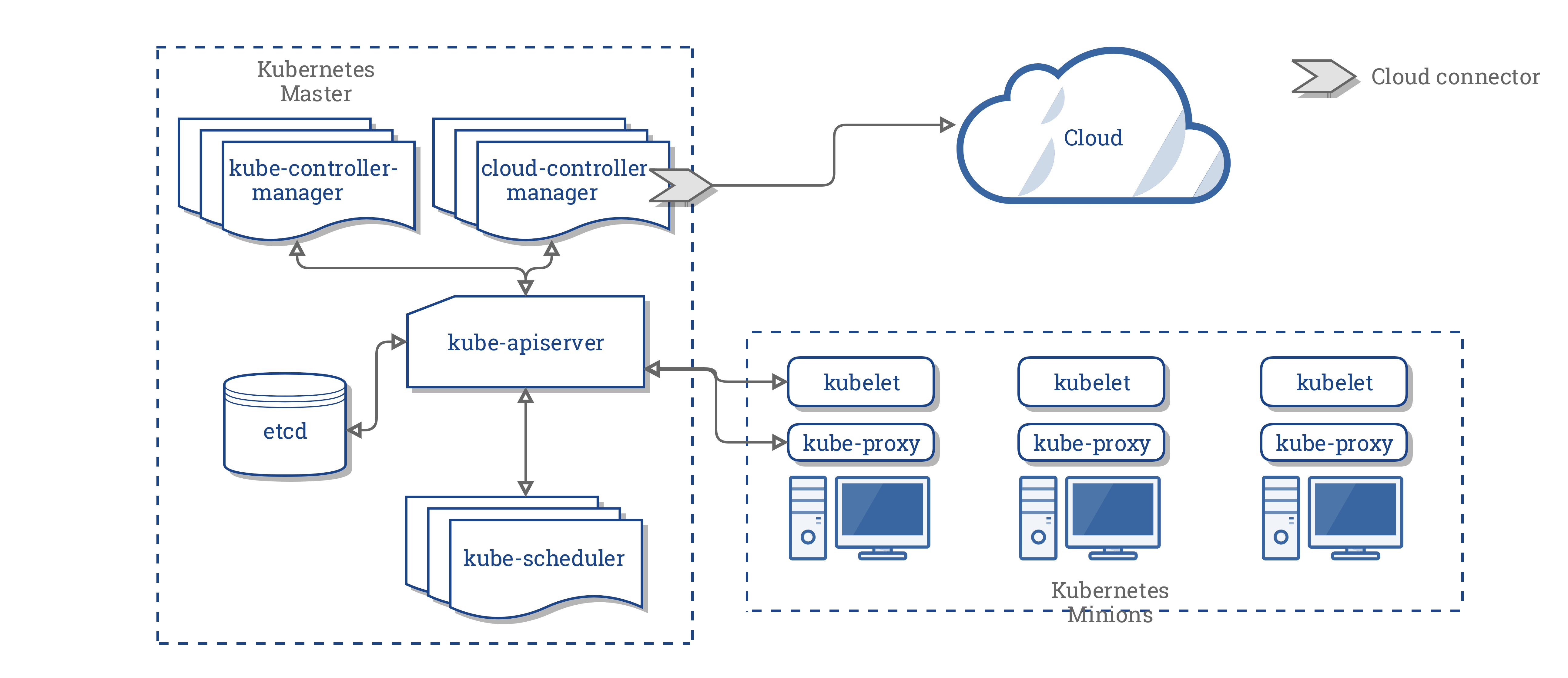

Kubernetes Architecture

Container runtime

Docker , rkt , containerd or any OCI compliant runtime which will download image , configures network , mount volumes and assist container life cycle management.

kubelet

Responsible for instructing container runtime to start , stop or modify a container

kube-proxy

Manage service IPs and iptables rules

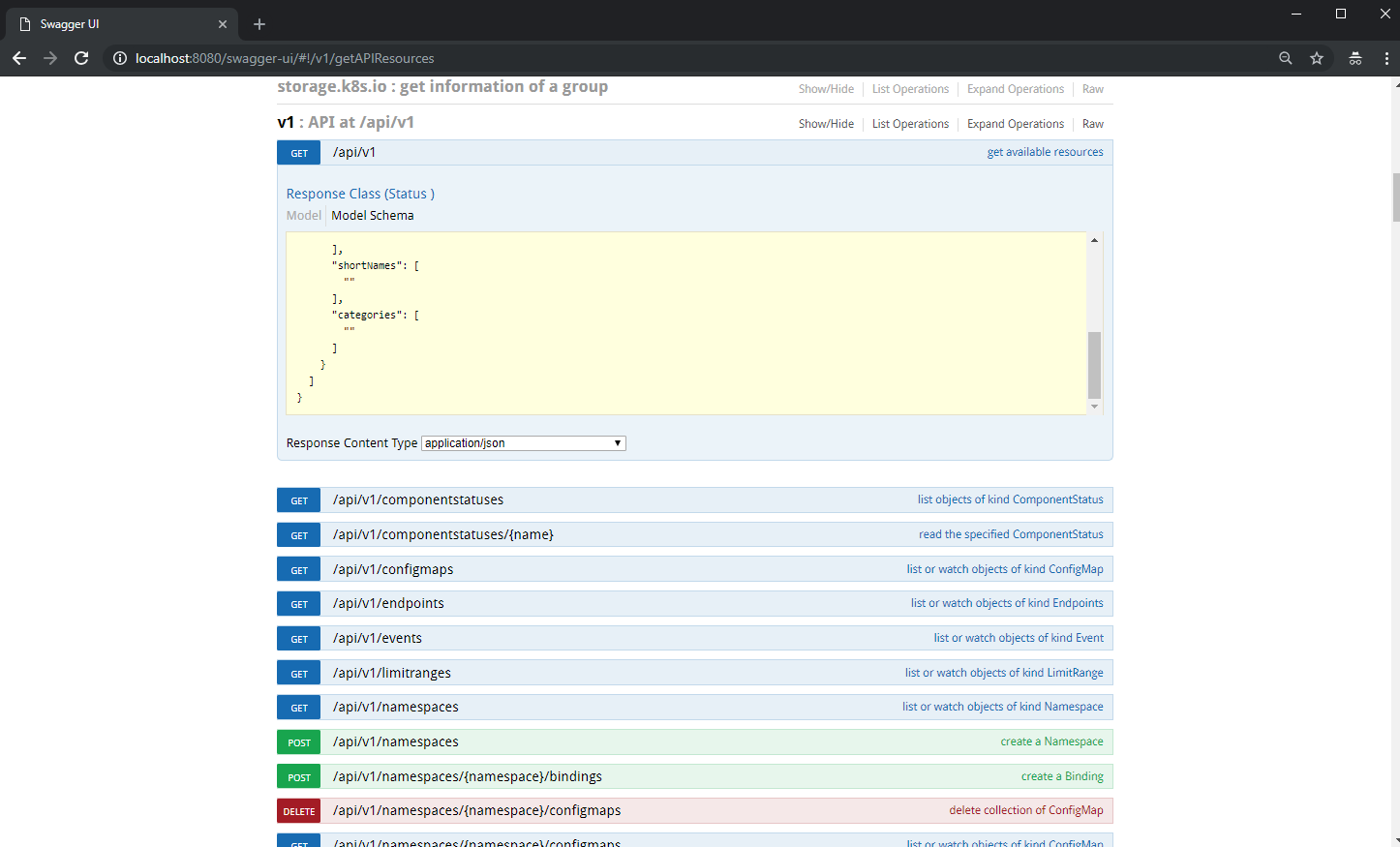

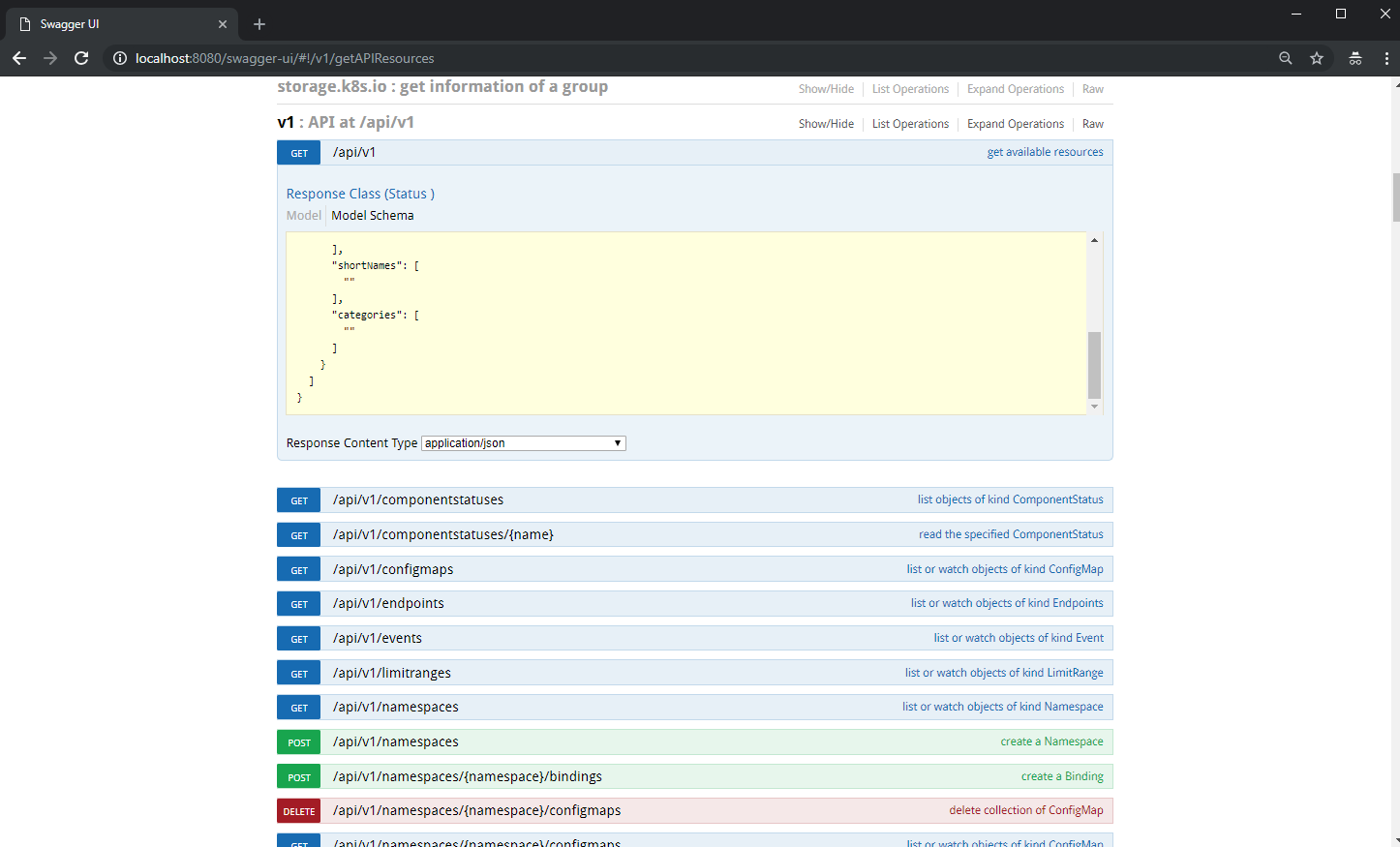

kube-apiserver

API server interacts with all other components in cluster All client interactions will happen via API server

kube-scheduler

Responsible for scheduling workload on minions or worker nodes based on resource constraints

kube-controller-manager

Responsible for monitoring different containers in reconciliation loop Will discuss more about different controllers later in this course

etcd

Persistent store where we store all configurations and cluster state

cloud-controller-manager

Cloud vendor specific controller and cloud vendor is Responsible to develop this program

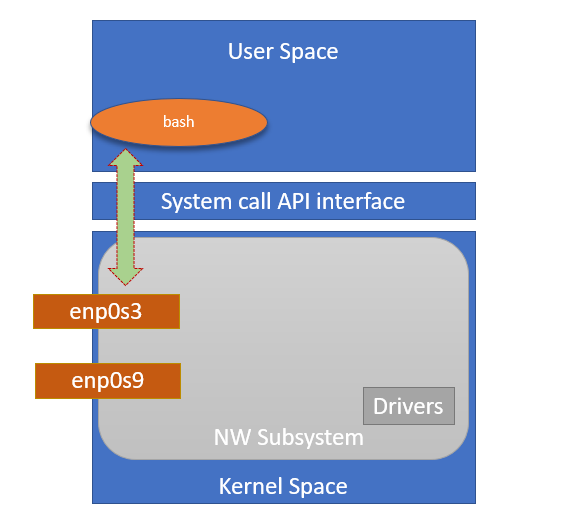

Container Networking

We need to access the container from outside world and the container running on different hosts have to communicate each other.

Here we will see how can we do it with bridging.

Traditional networking

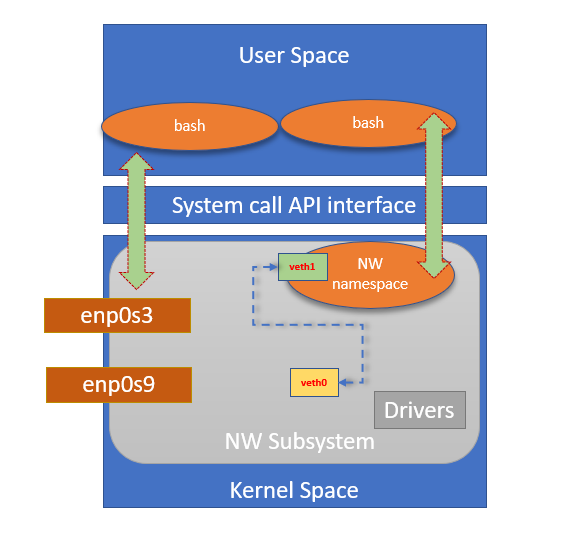

Create a veth pair on Host.

$ sudo ip link add veth0 type veth peer name veth1

$ sudo ip link showCreate a network namespace

$ sudo ip netns add bash-nw-namespace

$ sudo ip netns showConnect one end to namespace

$ sudo ip link set veth1 netns bash-nw-namespace

$ sudo ip link listResulting network

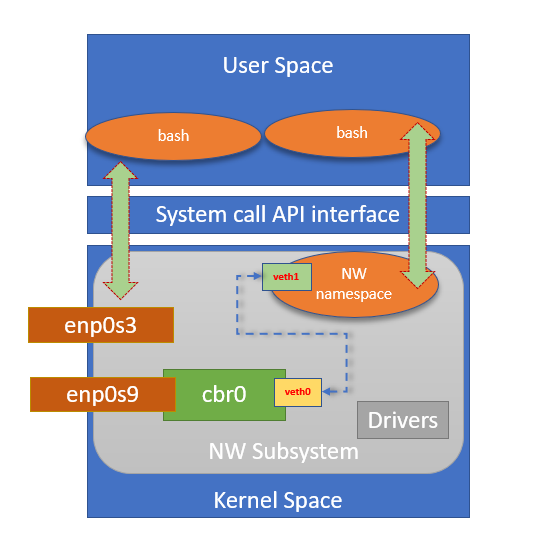

Create a Bridge interface

$ sudo brctl addbr cbr0Add an external interface to bridge

$ sudo brctl addif cbr0 enp0s9

$ sudo brctl showConnect other end to a switch

$ sudo brctl addif cbr0 veth0

$ sudo brctl showResulting network

Assign IP to interface

$ sudo ip netns exec bash-nw-namespace bash

$ sudo ip addr add 192.168.56.10/24 dev veth1

$ sudo ip link set lo up

$ sudo ip link set dev veth1 upAccess container IP from outside

Like bridging , we can opt other networking solutions.

Later we will see how Weave Network and Calico plugins works. You may read bit more on Docker networking basics on below blog post

Installation

- In this chapter we will install VirtualBox and setup networking.

- We will learn how to install and configure Docker.

- Also we will install a two node kubernetes cluster using kubeadm.

Subsections of Installation

Install VirtualBox

Download the latest VBox installer and VBox Extension Pack

Installation procedure is available in below link

VirtualBox Network Configuration

- Create HostOnly network ( Default will be 192.168.56.0/24)

- Open Virtual Box

- Got to menu and navigate to File ->Host Network Manager

- Then click “Create” This will create a Host-Only Network.

DHCP should be disabled on this network.

Internet access is needed on all VMs (for downloading needed binaries).

Make sure you can see the NAT network.(If not , create one).

| VBox Host Networking | |

|---|---|

| HostOnly | 192.168.56.0/24 |

| NAT | VBOX Defined |

Ubuntu 16.04 Installation

- Download Ubuntu 16.04 ISO http://releases.ubuntu.com/16.04/ubuntu-16.04.5-server-amd64.iso

Create a template VM which will be used to clone all needed VMs

- You need at least 50GB free space to host all VMs

- All VMs will be placed in a directory called (Don’t create these manually now!)

DRIVE_NAME:/VMs/(ReplaceDRIVE_NAMEwith a mount point or Driver name) - Install Ubuntu 16.04 with latest patches

- VM configuration

- VM Name :

k8s-master-01 - Memory : 2 GB

- CPU : 2

- Disk : 100GB

- HostOnly interface : 1 (ref. step 1).

- NAT network interface : 1

- VM Name :

By default , NAT will be the first in network adapter order , change it. NAT interface should be the second interface and Host-Only should be the first one

Install Ubuntu on this VM and go ahead with all default options

When asked, provide user name

k8sand set passwordMake sure to select the NAT interface as primary during installation.

Select below in

Software SelectionscreenManual Software Selection

OpenSSH Server

After restart , make sure NAT interface is up

Login to the template VM with user

k8sand execute below commands to install latest patches.

$ sudo apt-get update

$ sudo apt-get upgrade- Poweroff template VM

$ sudo poweroffClone VM

You may use VirtualBox GUI to create a full clone - Preferred You can use below commands to clone a VM - Execute it at your own risk ;)

- Open CMD and execute below commands to create all needed VMs.

You can replace the value of

DRIVER_NAMEwith a drive which is having enough free space (~50GB) - Windows

set DRIVE_NAME=D

cd C:\Program Files\Oracle\VirtualBox

VBoxManage.exe clonevm "k8s-master-01" --name "k8s-worker-01" --groups "/K8S Training" --basefolder "%DRIVE_NAME%:\VMs" --register- Mac or Linux (Need to test)

DRIVE_NAME=${HOME}

VBoxManage clonevm "k8s-master-01" --name "k8s-worker-01" --groups "/K8S Training" --basefolder ${DRIVE_NAME}/VMs" --registerStart VMs one by one and perform below

Execute below steps on both master and worker nodes

- Assign IP address and make sure it comes up at boot time.

$ sudo systemctl stop networking

$ sudo vi /etc/network/interfacesauto enp0s3 #<-- Make sure to use HostOnly interface (it can also be enp0s8)

iface enp0s3 inet static

address 192.168.56.X #<--- Replace X with corresponding IP octet

netmask 255.255.255.0$ sudo systemctl restart networkingYou may access the VM using the IP via SSH and can complete all remaining steps from that session (for copy paste :) )

- Change Host name

Execute below steps only on worker node

$ HOST_NAME=<host name> # <--- Replace <host name> with corresponding one$ sudo hostnamectl set-hostname ${HOST_NAME} --static --transient- Regenrate SSH Keys

$ sudo /bin/rm -v /etc/ssh/ssh_host_*

$ sudo dpkg-reconfigure openssh-server- Change iSCSI initiator IQN

$ sudo vi /etc/iscsi/initiatorname.iscsiInitiatorName=iqn.1993-08.org.debian:01:HOST_NAME #<--- Append HostName to have unique iscsi iqn- Change Machine UUID

$ sudo rm /etc/machine-id /var/lib/dbus/machine-id

$ sudo systemd-machine-id-setupExecute below steps on both master and worker nodes

Remove 127.0.1.1 entry from /etc/hosts

Add needed entries in /etc/hosts

$ sudo bash -c "cat <<EOF >>/etc/hosts

192.168.56.201 k8s-master-01

192.168.56.202 k8s-worker-01

EOF"- Add public DNS incase the local one is not responding in NAT

$ sudo bash -c "cat <<EOF >>/etc/resolvconf/resolv.conf.d/tail

nameserver 8.8.8.8

EOF"- Disable swap by commenting out swap_1 LV

$ sudo vi /etc/fstab# /dev/mapper/k8s--master--01--vg-swap_1 none swap sw 0 0- Reboot VMs

$ sudo rebootDo a ping test to make sure both VMs can reach each other.

Install Docker

In this session, we will install and setup docker in a simple and easy way on Ubuntu 16.04.

- Add gpg key to aptitude

$ curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add -- Add repository

$ sudo add-apt-repository "deb [arch=amd64] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable"- Refresh repository

$ sudo apt-get update- Verify whether docker is available in repo or not

$ sudo apt-cache policy docker-cedocker-ce:

Installed: (none)

Candidate: 5:18.09.0~3-0~ubuntu-xenial

Version table:

5:18.09.0~3-0~ubuntu-xenial 500

...- Install docker

$ sudo apt-get install -y docker-ce- Make sure docker is running

$ sudo systemctl status docker● docker.service - Docker Application Container Engine

Loaded: loaded (/lib/systemd/system/docker.service; enabled; vendor preset: enabled)

Active: active (running) since Wed 2018-12-26 17:14:59 UTC; 4min 27s ago

Docs: https://docs.docker.com

Main PID: 1191 (dockerd)

Tasks: 10

Memory: 76.4M

CPU: 625ms

CGroup: /system.slice/docker.service

└─1191 /usr/bin/dockerd -H unix://

...- Add user to docker group so that this user can execute docker commands.

$ sudo usermod -aG docker ${USER}Logout the session and login again to refresh the group membership.

- Verify docker by executing info command.

$ docker info |grep 'Server Version'Server Version: 18.09.0

Setup Golang

- Download Golang tarball

$ curl -O https://dl.google.com/go/go1.11.4.linux-amd64.tar.gz- Extract the contents

$ tar -xvf go1.11.4.linux-amd64.tar.gz- Move the contents to /usr/local directory

$ sudo mv go /usr/local/- Add the environmental variable GOPATH to .profile

cat <<EOF >>~/.profile

export GOPATH=\$HOME/work

export PATH=\$PATH:/usr/local/go/bin:\$GOPATH/bin

EOF- Create the work directory

$ mkdir $HOME/work- Load the profile

$ source ~/.profile- Verify Golang setup

$ go versiongo version go1.11.4 linux/amd64

- Create a directory tree to map to a github repository

$ mkdir -p $GOPATH/src/github.com/ansilh/golang-demo- Create a hello world golang program

$ vi $GOPATH/src/github.com/ansilh/golang-demo/main.go- Paste below code

package main

import "fmt"

func main(){

fmt.Println("Hello World.!")

}- Build and install the program

go install github.com/ansilh/golang-demo

- Execute the program to see the output

$ golang-demoHello World.!

Build a Demo WebApp

- Create a directory for the demo app.

$ mkdir -p ${GOPATH}/src/github.com/ansilh/demo-webapp- Create demo-webapp.go file

$ vi ${GOPATH}/src/github.com/ansilh/demo-webapp/demo-webapp.gopackage main

import (

"fmt"

"net/http"

"log"

)

func demoDefault(w http.ResponseWriter, r *http.Request) {

fmt.Fprintf(w, "404 - Page not found - This is a dummy default backend") // send data to client side

}

func main() {

http.HandleFunc("/", demoDefault) // set router

err := http.ListenAndServe(":9090", nil) // set listen port

if err != nil {

log.Fatal("ListenAndServe: ", err)

}

}- Build a static binary

$ cd $GOPATH/src/github.com/ansilh/demo-webapp

$ CGO_ENABLED=0 GOOS=linux GOARCH=amd64 go build -a -installsuffix cgo -ldflags="-w -s" -o $GOPATH/bin/demo-webapp- Execute the program

$ demo-webappOpen the browser and check if you can see the response using IP:9090 If you see the output “404 – Page not found – This is a dummy default backend” indicates that the program is working

Press Ctrl+c to terminate the program

Build a Docker image

Create a Docker Hub account

- Let’s create a directory to store the Dockerfile

$ mkdir ~/demo-webapp- Copy the pre-built program

$ cp $GOPATH/bin/demo-webapp ~/demo-webapp/- Create a Dockerfile.

$ cd ~/demo-webapp/

$ vi DockerfileFROM scratch

LABEL maintainer="Ansil H"

LABEL email="ansilh@gmail.com"

COPY demo-webapp /

CMD ["/demo-webapp"]- Build the docker image

$ sudo docker build -t <docker login name>/demo-webapp .

Eg:-

$ sudo docker build -t ansilh/demo-webapp .- Login to Docker Hub using your credentials

$ docker login- Push image to Docker hub

$ docker push <docker login name>/demo-webapp

Eg:-

$ docker push ansilh/demo-webappCongratulations ! . Now the image you built is available in Docker Hub and we can use this image to run containers in upcoming sessions

Docker - Container management

Start a Container

- Here we map port 80 of host to port 9090 of cotainer

- Verify application from browser

- Press Ctrl+c to exit container

$ docker run -p 80:9090 ansilh/demo-webapp- Start a Container in detach mode

$ docker run -d -p 80:9090 ansilh/demo-webapp- List Container

$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

4c8364e0d031 ansilh/demo-webapp "/demo-webapp" 11 seconds ago Up 10 seconds 0.0.0.0:80->9090/tcp zen_gauss- List all containers including stopped containers

$ docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

4c8364e0d031 ansilh/demo-webapp "/demo-webapp" 2 minutes ago Up 2 minutes 0.0.0.0:80->9090/tcp zen_gauss

acb01851c20a ansilh/demo-webapp "/demo-webapp" 2 minutes ago Exited (2) 2 minutes ago condescending_antonelli- List resource usage (Press Ctrl+c to exit)

$ docker stats zen_gauss- Stop Container

$ docker stop zen_gauss- List images

$ docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

ansilh/demo-webapp latest b7c5e17ae85e 8 minutes ago 4.81MB- Remove containers

$ docker rm zen_gauss- Delete images

$ docker rmi ansilh/demo-webappInstall kubeadm

Verify the MAC address and product_uuid are unique for every node

(ip link or ifconfig -a and sudo cat /sys/class/dmi/id/product_uuid)

- Download pre-requisites

$ sudo apt-get update && sudo apt-get install -y apt-transport-https curl- Add gpg key for apt

$ curl -s https://packages.cloud.google.com/apt/doc/apt-key.gpg |sudo apt-key add -- Add apt repository

$ cat <<EOF |sudo tee -a /etc/apt/sources.list.d/kubernetes.list

deb https://apt.kubernetes.io/ kubernetes-xenial main

EOF- Install kubelet , kubeadm and kubectl

$ sudo apt-get update

$ sudo apt-get install -y kubelet kubeadm kubectl

$ sudo apt-mark hold kubelet kubeadm kubectlRepeat the same steps on worker node

Deploy master Node

- Initialize kubeadm with pod IP range

$ sudo kubeadm init --apiserver-advertise-address=192.168.56.201 --pod-network-cidr=10.10.0.0/16 --service-cidr=192.168.10.0/24- Configure

kubectl

$ mkdir -p $HOME/.kube

$ sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

$ sudo chown $(id -u):$(id -g) $HOME/.kube/config- Verify master node status

$ kubectl cluster-info- Output will be like below

Kubernetes master is running at https://192.168.56.201:6443

KubeDNS is running at https://192.168.56.201:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

Move to next session to deploy network plugin.

Deploy Network Plugin - Calico

- Apply RBAC rules (More about RBAC will discuss later)

$ kubectl apply -f https://docs.projectcalico.org/v3.3/getting-started/kubernetes/installation/hosted/rbac-kdd.yaml- Download Calico deployment YAML

$ wget https://docs.projectcalico.org/v3.3/getting-started/kubernetes/installation/hosted/kubernetes-datastore/calico-networking/1.7/calico.yaml- Edit

CALICO_IPV4POOL_CIDRvalue to10.10.0.0/16

- name: CALICO_IPV4POOL_CIDR

value: "10.10.0.0/16"- Add

name: IP_AUTODETECTION_METHOD&value: "can-reach=192.168.56.1"(This IP should be the host only network ip on your laptop)

...

image: quay.io/calico/node:v3.3.2

env:

- name: IP_AUTODETECTION_METHOD

value: "can-reach=192.168.56.1"

...- Apply Deployment

$ kubectl apply -f calico.yaml- Make sure the

READYstatus should show same value on left and right side of/andPodSTATUSshould beRunning

$ kubectl get pods -n kube-system |nl1 NAME READY STATUS RESTARTS AGE

2 calico-node-2pwv9 2/2 Running 0 20m

3 coredns-86c58d9df4-d9q2l 1/1 Running 0 21m

4 coredns-86c58d9df4-rwv7r 1/1 Running 0 21m

5 etcd-k8s-master-01 1/1 Running 0 20m

6 kube-apiserver-k8s-master-01 1/1 Running 0 20m

7 kube-controller-manager-k8s-master-01 1/1 Running 0 20m

8 kube-proxy-m6m9n 1/1 Running 0 21m

9 kube-scheduler-k8s-master-01 1/1 Running 0 20mContact the Trainer if the output is not the expected one after few minutes (~3-4mins).

Add worker node to cluster

- Get discovery secret from Master node.

$ echo sha256:$(openssl x509 -in /etc/kubernetes/pki/ca.crt -noout -pubkey | openssl rsa -pubin -outform DER 2>/dev/null | sha256sum | cut -d' ' -f1)- Get node join token from Master node.

$ kubeadm token list |grep bootstra |awk '{print $1}'- Execute kubeadm command to add the Worker to cluster

$ sudo kubeadm join 192.168.56.201:6443 --token <token> --discovery-token-ca-cert-hash <discovery hash>- Verify system Pod status

$ kubectl get pods -n kube-system |nl- Output

1 NAME READY STATUS RESTARTS AGE

2 calico-node-2pwv9 2/2 Running 0 20m

3 calico-node-hwnfh 2/2 Running 0 19m

4 coredns-86c58d9df4-d9q2l 1/1 Running 0 21m

5 coredns-86c58d9df4-rwv7r 1/1 Running 0 21m

6 etcd-k8s-master-01 1/1 Running 0 20m

7 kube-apiserver-k8s-master-01 1/1 Running 0 20m

8 kube-controller-manager-k8s-master-01 1/1 Running 0 20m

9 kube-proxy-m6m9n 1/1 Running 0 21m

10 kube-proxy-shwgp 1/1 Running 0 19m

11 kube-scheduler-k8s-master-01 1/1 Running 0 20mPods & Nodes

In this session , we will explore Pods and Nodes.

We will also create a Coffee application Pod

Subsections of Pods & Nodes

Introduction

What is a Pod ?

A Pod is the basic building block of Kubernetes–the smallest and simplest unit in the Kubernetes object model that you create or deploy. A Pod represents a running process on your cluster

The “one-container-per-Pod” model is the most common Kubernetes use case; in this case, you can think of a Pod as a wrapper around a single container, and Kubernetes manages the Pods rather than the containers directly.

A Pod might encapsulate an application composed of multiple co-located containers that are tightly coupled and need to share resources. These co-located containers might form a single cohesive unit of service–one container serving files from a shared volume to the public, while a separate “sidecar” container refreshes or updates those files. The Pod wraps these containers and storage resources together as a single manageable entity.

What is a Node?

A Pod always runs on a Node. A Node is a worker machine in Kubernetes and may be either a virtual or a physical machine, depending on the cluster. Each Node is managed by the Master. A Node can have multiple pods, and the Kubernetes master automatically handles scheduling the pods across the Nodes in the cluster. The Master’s automatic scheduling takes into account the available resources on each Node.

Create a Pod - Declarative

After completing this session , you will be able to create Pod declaratively and will be able to login to check services running on other pods.

So lets get started.

Lets Check the running Pods

k8s@k8s-master-01:~$ kubectl get pods

No resources found.

k8s@k8s-master-01:~$Nothing

Lets create one using a YAML file

$ vi pod.yamlapiVersion: v1

kind: Pod

metadata:

name: coffee-app

spec:

containers:

- image: ansilh/demo-coffee

name: coffeeApply YAML using kubectl command

$ kubectl apply -f pod.yamlView status of Pod

Pod status is ContainerCreating

$ kubectl get podsOutput

NAME READY STATUS RESTARTS AGE

coffee-app 0/1 ContainerCreating 0 4s

Execute kubectl get pods after some time

Now Pod status will change to Running

$ kubectl get podsOutput

NAME READY STATUS RESTARTS AGE

coffee-app 1/1 Running 0 27s

Now we can see our first Pod

Get the IP address of Pod

$ kubectl get pods -o wideOutput

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

coffee-app 1/1 Running 0 2m8s 192.168.1.7 k8s-worker-01 <none> <none>

Create a new CentOS container

$ vi centos-pod.yamlapiVersion: v1

kind: Pod

metadata:

name: centos-pod

spec:

containers:

- image: tutum/centos

name: centosApply the Yaml spec

$ kubectl apply -f centos-pod.yamlVerify the status of Pod

$ kubectl get podsNAME READY STATUS RESTARTS AGE

centos-pod 0/1 ContainerCreating 0 12s

coffee-app 1/1 Running 0 5m31s

After some time status will change to Running

$ kubectl get podsNAME READY STATUS RESTARTS AGE

centos-pod 1/1 Running 0 59s

coffee-app 1/1 Running 0 6m18sLogin to CentOS Pod

$ kubectl exec -it centos-pod -- /bin/bashVerify Coffee app using curl

$ curl -s 192.168.1.13:9090 |grep 'Serving'<html><head></head><title></title><body><div> <h2>Serving Coffee from</h2><h3>Pod:coffee-app</h3><h3>IP:192.168.1.13</h3><h3>Node:172.16.0.1</h3><img src="data:image/png;base64,

[root@centos-pod /]#

Delete pod

$ kubectl delete pod coffee-app centos-podpod "coffee-app" deleted

pod "centos-pod" deleted

Make sure not pod is running

$ kubectl get podsCreate a Pod - Imperative

Execute kubectl command to create a Pod.

$ kubectl run coffee --image=ansilh/demo-coffee --restart=Never

pod/coffee createdVerify Pod status

$ kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

coffee 0/1 ContainerCreating 0 6s <none> k8s-worker-01 <none> <none>

$ kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

coffee 1/1 Running 0 19s 192.168.1.15 k8s-worker-01 <none> <none>Start a CentOS container

$ kubectl run centos-pod --image=tutum/centos --restart=Never

pod/centos-pod createdverify status of the Pod ; it should be in Running

$ kubectl get pods

NAME READY STATUS RESTARTS AGE

centos-pod 1/1 Running 0 25s

coffee 1/1 Running 0 2m10sLogon to CentOS Pod

$ kubectl exec -it centos-pod -- /bin/bash

[root@centos-pod /]#Verify Coffee App status

[root@centos-pod /]# curl -s 192.168.1.15:9090 |grep 'Serving Coffee'

<html><head></head><title></title><body><div> <h2>Serving Coffee from</h2><h3>Pod:coffee</h3><h3>IP:192.168.1.15</h3><h3>Node:172.16.0.1</h3><img src="data:image/png;base64,

[root@centos-pod /]# exitDelete pod

k8s@k8s-master-01:~$ kubectl delete pod coffee centos-pod

pod "coffee" deleted

pod "centos-pod" deleted

k8s@k8s-master-01:~$ kubectl get pods

No resources found.

k8s@k8s-master-01:~$Nodes

In this session , we will explore the node details

List nodes

$ k8s@k8s-master-01:~$ kubectl get nodesOutput

NAME STATUS ROLES AGE VERSION

k8s-master-01 Ready master 38h v1.13.1

k8s-worker-01 Ready <none> 38h v1.13.1

Extended listing

$ kubectl get nodes -o wideOutput

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

k8s-master-01 Ready master 38h v1.13.1 192.168.56.201 <none> Ubuntu 16.04.5 LTS 4.4.0-131-generic docker://18.9.0

k8s-worker-01 Ready <none> 38h v1.13.1 192.168.56.202 <none> Ubuntu 16.04.5 LTS 4.4.0-131-generic docker://18.9.0

k8s@k8s-master-01:~$Details on a node

$ kubectl describe node k8s-master-01Output

Name: k8s-master-01

Roles: master

Labels: beta.kubernetes.io/arch=amd64

beta.kubernetes.io/os=linux

kubernetes.io/hostname=k8s-master-01

node-role.kubernetes.io/master=

Annotations: kubeadm.alpha.kubernetes.io/cri-socket: /var/run/dockershim.sock

node.alpha.kubernetes.io/ttl: 0

projectcalico.org/IPv4Address: 192.168.56.201/24

volumes.kubernetes.io/controller-managed-attach-detach: true

CreationTimestamp: Mon, 31 Dec 2018 02:10:05 +0530

Taints: node-role.kubernetes.io/master:NoSchedule

Unschedulable: false

Conditions:

Type Status LastHeartbeatTime LastTransitionTime Reason Message

---- ------ ----------------- ------------------ ------ -------

MemoryPressure False Tue, 01 Jan 2019 17:01:28 +0530 Mon, 31 Dec 2018 02:10:02 +0530 KubeletHasSufficientMemory kubelet has sufficient memory available

DiskPressure False Tue, 01 Jan 2019 17:01:28 +0530 Mon, 31 Dec 2018 02:10:02 +0530 KubeletHasNoDiskPressure kubelet has no disk pressure

PIDPressure False Tue, 01 Jan 2019 17:01:28 +0530 Mon, 31 Dec 2018 02:10:02 +0530 KubeletHasSufficientPID kubelet has sufficient PID available

Ready True Tue, 01 Jan 2019 17:01:28 +0530 Mon, 31 Dec 2018 22:59:35 +0530 KubeletReady kubelet is posting ready status. AppArmor enabled

Addresses:

InternalIP: 192.168.56.201

Hostname: k8s-master-01

Capacity:

cpu: 1

ephemeral-storage: 49732324Ki

hugepages-2Mi: 0

memory: 2048168Ki

pods: 110

Allocatable:

cpu: 1

ephemeral-storage: 45833309723

hugepages-2Mi: 0

memory: 1945768Ki

pods: 110

System Info:

Machine ID: 96cedf74a821722b0df5ee775c291ea2

System UUID: 90E04905-218D-4673-A911-9676A65B07C5

Boot ID: 14201246-ab82-421e-94f6-ff0d8ad3ba54

Kernel Version: 4.4.0-131-generic

OS Image: Ubuntu 16.04.5 LTS

Operating System: linux

Architecture: amd64

Container Runtime Version: docker://18.9.0

Kubelet Version: v1.13.1

Kube-Proxy Version: v1.13.1

PodCIDR: 192.168.0.0/24

Non-terminated Pods: (6 in total)

Namespace Name CPU Requests CPU Limits Memory Requests Memory Limits AGE

--------- ---- ------------ ---------- --------------- ------------- ---

kube-system calico-node-nkcrd 250m (25%) 0 (0%) 0 (0%) 0 (0%) 38h

kube-system etcd-k8s-master-01 0 (0%) 0 (0%) 0 (0%) 0 (0%) 38h

kube-system kube-apiserver-k8s-master-01 250m (25%) 0 (0%) 0 (0%) 0 (0%) 38h

kube-system kube-controller-manager-k8s-master-01 200m (20%) 0 (0%) 0 (0%) 0 (0%) 38h

kube-system kube-proxy-tzznm 0 (0%) 0 (0%) 0 (0%) 0 (0%) 38h

kube-system kube-scheduler-k8s-master-01 100m (10%) 0 (0%) 0 (0%) 0 (0%) 38h

Allocated resources:

(Total limits may be over 100 percent, i.e., overcommitted.)

Resource Requests Limits

-------- -------- ------

cpu 800m (80%) 0 (0%)

memory 0 (0%) 0 (0%)

ephemeral-storage 0 (0%) 0 (0%)

Events: <none>We will discuss more about each of the fields on upcoming sessions.

For now lets discuss about Non-terminated Pods field;

Non-terminated Pods field

- Namespace : The namespace which the Pods were running .

The pods that we create will by default go to

defaultnamespace. - Name : Name of the Pod

- CPU Request : How much CPU resource requested by Pod during startup.

- CPU Limits : How much CPU the Pod can use.

- Memory Request : How much memory requested by Pod during startup.

- Memory Limits : How much memory the Pod can use.

Namespaces

What is a namespace

We have see namespaces in Linux , which ideally isolates objects and here also the concept is same but serves a different purpose. Suppose you have two departments in you organization and both departments have application which needs more fine grained control. We can use namespaces to separate the workload of each departments.

By default kubernetes will have three namespace

List namespace

$ kubectl get ns

NAME STATUS AGE

default Active 39h

kube-public Active 39h

kube-system Active 39hdefault : All Pods that we manually create will go to this namespace (There are ways to change it , but for now that is what it is). kube-public : All common workloads can be assigned to this namespace . Most of the time no-one use it. kube-system : Kubernetes specific Pods will be running on this namespace

List Pods in kube-system namespace

$ kubectl get pods --namespace=kube-system

NAME READY STATUS RESTARTS AGE

calico-node-n99tb 2/2 Running 0 38h

calico-node-nkcrd 2/2 Running 0 38h

coredns-86c58d9df4-4c22l 1/1 Running 0 39h

coredns-86c58d9df4-b49c2 1/1 Running 0 39h

etcd-k8s-master-01 1/1 Running 0 39h

kube-apiserver-k8s-master-01 1/1 Running 0 39h

kube-controller-manager-k8s-master-01 1/1 Running 0 39h

kube-proxy-s6hc4 1/1 Running 0 38h

kube-proxy-tzznm 1/1 Running 0 39h

kube-scheduler-k8s-master-01 1/1 Running 0 39hAs you can see , there are many Pods running in kube-system namespace

All these Pods were running with one or mode containers

If you see the calico-node-n99tb pod , the READY says 2/2 , which means two containers were running fine in this Pod

List all resources in a namespace

k8s@k8s-master-01:~$ kubectl get all -n kube-system

NAME READY STATUS RESTARTS AGE

pod/calico-node-kr5xg 2/2 Running 0 13m

pod/calico-node-lcpbw 2/2 Running 0 13m

pod/coredns-86c58d9df4-h8pjr 1/1 Running 6 26m

pod/coredns-86c58d9df4-xj24c 1/1 Running 6 26m

pod/etcd-k8s-master-01 1/1 Running 0 26m

pod/kube-apiserver-k8s-master-01 1/1 Running 0 26m

pod/kube-controller-manager-k8s-master-01 1/1 Running 0 26m

pod/kube-proxy-fl7rj 1/1 Running 0 26m

pod/kube-proxy-q6w9l 1/1 Running 0 26m

pod/kube-scheduler-k8s-master-01 1/1 Running 0 26m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/calico-typha ClusterIP 172.16.244.140 <none> 5473/TCP 13m

service/kube-dns ClusterIP 172.16.0.10 <none> 53/UDP,53/TCP 27m

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

daemonset.apps/calico-node 2 2 2 2 2 beta.kubernetes.io/os=linux 13m

daemonset.apps/kube-proxy 2 2 2 2 2 <none> 27m

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/calico-typha 0/0 0 0 13m

deployment.apps/coredns 2/2 2 2 27m

NAME DESIRED CURRENT READY AGE

replicaset.apps/calico-typha-5fc4874c76 0 0 0 13m

replicaset.apps/coredns-86c58d9df4 2 2 2 26m

k8s@k8s-master-01:~$Self Healing - Readiness

Readiness Probe

We have seen that our coffee application was listening on port 9090. Lets assume that the application is not coming up but Pod status showing running. Everyone will think that application is up. You entire application stack might get affected because of this.

So here comes the question , “How can I make sure my application is started, not just the Pod ?”

Here we can use Pod spec, Readiness probe.

Official detention of readinessProbe is , “Periodic probe of container service readiness”.

Lets rewrite the Pod specification of Coffee App and add a readiness Probe.

$ vi pod-readiness.yamlapiVersion: v1

kind: Pod

metadata:

name: coffee-app

spec:

containers:

- image: ansilh/demo-coffee

name: coffee

readinessProbe:

initialDelaySeconds: 10

httpGet:

port: 9090Apply Yaml

$ kubectl apply -f pod-readiness.yaml

pod/coffee-app createdVerify Pod status

Try to identify the difference.

$ kubectl get pods

NAME READY STATUS RESTARTS AGE

coffee-app 0/1 ContainerCreating 0 3s

$ kubectl get pods

NAME READY STATUS RESTARTS AGE

coffee-app 0/1 Running 0 25s

$ kubectl get pods

NAME READY STATUS RESTARTS AGE

coffee-app 1/1 Running 0 32sDelete the Pod

Yes ,we can delete the objects using the same yaml which we used to create/apply it

$ kubectl delete -f pod-readiness.yaml

pod "coffee-app" deleted

$Probe Tuning.

failureThreshold <integer>

Minimum consecutive failures for the probe to be considered failed after

having succeeded. Defaults to 3. Minimum value is 1.

initialDelaySeconds <integer>

Number of seconds after the container has started before liveness probes

are initiated. More info:

https://kubernetes.io/docs/concepts/workloads/pods/pod-lifecycle#container-probes

periodSeconds <integer>

How often (in seconds) to perform the probe. Default to 10 seconds. Minimum

value is 1.

timeoutSeconds <integer>

Number of seconds after which the probe times out. Defaults to 1 second.

Minimum value is 1. More info:

https://kubernetes.io/docs/concepts/workloads/pods/pod-lifecycle#container-probesSelf Healing - Liveness

Liveness Probe

Lets assume the application failed after readiness probe execution completes Again we are back to service unavailability

To avoid this , we need a liveness check which will do a periodic health check after Pod start running or readiness probe completes.

Lets rewrite the Pod specification of Coffee App and add a liveness Probe.

$ vi pod-liveiness.yamlapiVersion: v1

kind: Pod

metadata:

name: coffee-app

spec:

containers:

- image: ansilh/demo-coffee

name: coffee

readinessProbe:

initialDelaySeconds: 10

httpGet:

port: 9090

livenessProbe:

periodSeconds: 5

httpGet:

port: 9090Create Pod

$ kubectl create -f pod-liveness.yamlResource Allocation

Limits

We can limit the CPU and Memory usage of a container so that one

Lets create the coffee Pod again with CPU and Memory limits

apiVersion: v1

kind: Pod

metadata:

labels:

name: coffee-limits

spec:

containers:

- image: ansilh/demo-coffee

name: coffee

resources:

limits:

CPU: 100m

Memory: 123MiResulting container will be allowed to use 100 millicores and 123 mebibyte (~128 Megabytes)

CPU

One CPU core is equivalent to 1000m (one thousand millicpu or one thousand millicores)

CPU is always expressed as an absolute quantity, never as a relative quantity; 0.1 is the same amount of CPU on a single-core, dual-core, or 48-core machine

Memory

You can express memory as a plain integer or as a fixed-point integer using one of these suffixes: E, P, T, G, M, K. You can also use the power-of-two equivalents: Ei, Pi, Ti, Gi, Mi, Ki. For example, the following represent roughly the same value:

128974848, 129e6, 129M, 123MiMebibyte vs Megabyte

1 Megabyte (MB) = (1000)^2 bytes = 1000000 bytes.

1 Mebibyte (MiB) = (1024)^2 bytes = 1048576 bytes.

Requests

We can request a specific amount of CPU and Memory when the container starts up.

Suppose if the Java application need at least 128MB of memory during startup , we can use resource request in Pod spec.

This will help the scheduler to select a node with enough memory.

Request also can be made of CPU as well.

Lets modify the Pod spec and add request

apiVersion: v1

kind: Pod

metadata:

labels:

name: coffee-limits

spec:

containers:

- image: ansilh/demo-coffee

name: coffee

resources:

requests:

CPU: 100m

Memory: 123Mi

limits:

CPU: 200m

Memory: 244MiExtra

Once you complete the training , you can visit below URLs to understand storage and network limits.

Labels & Annotations

In this session , we will discuss the role of Labels and Annotations , also its role in fundamental k8s design.

Subsections of Labels & Annotations

Annotations

Why we need annotations ?

We can use either labels or annotations to attach metadata to Kubernetes objects. Labels can be used to select objects and to find collections of objects that satisfy certain conditions. In contrast, annotations are not used to identify and select objects. The metadata in an annotation can be small or large, structured or unstructured, and can include characters not permitted by labels.

Its just a place to store more metadata which is not used for any selection , grouping or operations.

Annotate Pod

Lets say , if you want to add a download URL to pod.

$ kubectl annotate pod coffee-app url=https://hub.docker.com/r/ansilh/demo-webapp

pod/coffee-app annotatedView annotations

k8s@k8s-master-01:~$ kubectl describe pod coffee-app

Name: coffee-app

Namespace: default

Priority: 0

PriorityClassName: <none>

Node: k8s-worker-01/192.168.56.202

Start Time: Fri, 04 Jan 2019 00:47:10 +0530

Labels: app=frontend

run=coffee-app

Annotations: cni.projectcalico.org/podIP: 10.10.1.11/32

url: https://hub.docker.com/r/ansilh/demo-webapp

Status: Running

IP: 10.10.1.11

...Annotations filed containe two entries

cni.projectcalico.org/podIP: 10.10.1.11/32

url: https://hub.docker.com/r/ansilh/demo-webapp

Remove annotation

Use same annotate command and mention only key with a dash (-) at the end of the key .

Below command will remove the annotation url: https://hub.docker.com/r/ansilh/demo-webapp from Pod.

k8s@k8s-master-01:~$ kubectl annotate pod coffee-app url-

pod/coffee-app annotated

k8s@k8s-master-01:~$Annotation after removal.

k8s@k8s-master-01:~$ kubectl describe pod coffee-app

Name: coffee-app

Namespace: default

Priority: 0

PriorityClassName: <none>

Node: k8s-worker-01/192.168.56.202

Start Time: Fri, 04 Jan 2019 00:47:10 +0530

Labels: app=frontend

run=coffee-app

Annotations: cni.projectcalico.org/podIP: 10.10.1.11/32

Status: Running

IP: 10.10.1.11Labels

Why we need labels ?

If you have a bucket of white dominos and you want to group it based on the number of dots.

Lets say we want all dominos with 10 dots; we will take domino one by one and if its having 10 dots ,we will put it aside and continue the same operation until all dominos were checked.

Likewise , suppose if you have 100 pods and few of them are nginx and few of them are centos , how we can see only nginx pods ?

We need a label on each pod so that we can tell kubectl command to show the pods with that label.

In kubernetes , label is a key value pair and it provides ‘identifying metadata’ for objects. These are fundamental qualities of objects that will be used for grouping , viewing and operating.

For now we will se how we can view them (Will discuss about grouping and operation on pod groups later)

Pod labels

Lets run a Coffee app Pod

k8s@k8s-master-01:~$ kubectl run coffee-app --image=ansilh/demo-coffee --restart=Never

pod/coffee-app created

k8s@k8s-master-01:~$ kubectl get pods

NAME READY STATUS RESTARTS AGE

coffee-app 1/1 Running 0 4s

k8s@k8s-master-01:~$See the labels of a Pods

k8s@k8s-master-01:~$ kubectl get pods --show-labels

NAME READY STATUS RESTARTS AGE LABELS

coffee-app 1/1 Running 0 37s run=coffee-app

k8s@k8s-master-01:~$As you can see above , the lables is run=coffee-app which is a key value pair - key is run value is coffee-app.

When we run Pod imperatively , kubectl ass this label to Pod.

Add custom label to Pod

We can add label to Pod using kubectl label command.

k8s@k8s-master-01:~$ kubectl label pod coffee-app app=frontend

pod/coffee-app labeled

k8s@k8s-master-01:~$Here we have add a label app=frontend to pod coffee-app.

Use label selectors

Lets start another coffee application pod with name coffee-app02.

k8s@k8s-master-01:~$ kubectl run coffee-app02 --image=ansilh/demo-coffee --restart=Never

pod/coffee-app02 created

k8s@k8s-master-01:~$Now we have two Pods.

k8s@k8s-master-01:~$ kubectl get pods --show-labels

NAME READY STATUS RESTARTS AGE LABELS

coffee-app 1/1 Running 0 5m5s app=frontend,run=coffee-app

coffee-app02 1/1 Running 0 20s run=coffee-app02

k8s@k8s-master-01:~$Lets see how can I select the Pods with label app=frontend.

k8s@k8s-master-01:~$ kubectl get pods --selector=app=frontend

NAME READY STATUS RESTARTS AGE

coffee-app 1/1 Running 0 6m52s

k8s@k8s-master-01:~$You can add as many as label you want.

We can add a prefix like app ( eg: app/dev=true ) which is also a valid label.

| Limitations | |

|---|---|

| Prefix | DNS subdomain with 256 characters |

| Key | 63 characters |

| Value | 63 characters |

Remove labels

See the labels of coffee-app

k8s@k8s-master-01:~$ kubectl get pods --show-labels

NAME READY STATUS RESTARTS AGE LABELS

coffee-app 1/1 Running 0 28m app=frontend,run=coffee-app

coffee-app02 1/1 Running 0 24m run=coffee-app02Remove the app label

k8s@k8s-master-01:~$ kubectl label pod coffee-app app-

pod/coffee-app labeledResulting output

k8s@k8s-master-01:~$ kubectl get pods --show-labels

NAME READY STATUS RESTARTS AGE LABELS

coffee-app 1/1 Running 0 29m run=coffee-app

coffee-app02 1/1 Running 0 24m run=coffee-app02

k8s@k8s-master-01:~$Taints and Tolerations

Taints and tolerations work together to ensure that pods are not scheduled onto inappropriate nodes.

Subsections of Taints and Tolerations

Taints

Why we need Taints ?

Just like labels , one or more taints can be applied to a node; this marks that the node should not accept any pods that do not tolerate the taints

$ kubectl taint node k8s-master-ah-01 node-role.kubernetes.io/master="":NoScheduleFormat key=value:Effect

Effects

NoSchedule - Pods will not be schedules

PreferNoSchedule- This is a “preference” or “soft” version of NoSchedule – the system will try to avoid placing a pod that does not tolerate the taint on the node, but it is not required.

NoExecute - pod will be evicted from the node (if it is already running on the node), and will not be scheduled onto the node (if it is not yet running on the node)

Tolerations

Why we need Tolerations ?

Tolerations can be specified on Pods Based on the taints on the nodes , Pods will scheduler will allow to run the Pod on the node.

Toleration syntax in Pod spec.

spec:

tolerations:

- key: node-role.kubernetes.io/master

effect: NoScheduleYAML Crash course

In this session we will learn k8s YAML specification and object types. We will cover only k8s dependent YAML specification

Subsections of YAML Crash course

Exploring Object Specs

So lets discuss about a new command kubectl explain so that we don’t have to remember all YAML specs of kubernetes objects.

With kubectl explain subcommand , you can see the specification of each objects and can use that as a reference to write your YAML files.

Fist level spec

We will use kubectl explain Pod command to see the specifications of a Pod YAML.

$ kubectl explain PodOutput

ubuntu@k8s-master-01:~$ kubectl explain pod

KIND: Pod

VERSION: v1

DESCRIPTION:

Pod is a collection of containers that can run on a host. This resource is

created by clients and scheduled onto hosts.

FIELDS:

apiVersion <string>

APIVersion defines the versioned schema of this representation of an

object. Servers should convert recognized schemas to the latest internal

value, and may reject unrecognized values. More info:

https://git.k8s.io/community/contributors/devel/api-conventions.md#resources

kind <string>

Kind is a string value representing the REST resource this object

represents. Servers may infer this from the endpoint the client submits

requests to. Cannot be updated. In CamelCase. More info:

https://git.k8s.io/community/contributors/devel/api-conventions.md#types-kinds

metadata <Object>

Standard object's metadata. More info:

https://git.k8s.io/community/contributors/devel/api-conventions.md#metadata

spec <Object>

Specification of the desired behavior of the pod. More info:

https://git.k8s.io/community/contributors/devel/api-conventions.md#spec-and-status

status <Object>

Most recently observed status of the pod. This data may not be up to date.

Populated by the system. Read-only. More info:

https://git.k8s.io/community/contributors/devel/api-conventions.md#spec-and-status

ubuntu@k8s-master-01:~$As we discussed earlier , the specification is very familiar.

Filed status is readonly and its system populated , so we don’t have to write anything for status.

Exploring inner fields

If we want to see the fields available in spec , then execute below command.

$ kubectl explain pod.specKIND: Pod

VERSION: v1

RESOURCE: spec <Object>

DESCRIPTION:

Specification of the desired behavior of the pod. More info:

https://git.k8s.io/community/contributors/devel/api-conventions.md#spec-and-status

PodSpec is a description of a pod.

FIELDS:

...

containers <[]Object> -required-

List of containers belonging to the pod. Containers cannot currently be

added or removed. There must be at least one container in a Pod. Cannot be

updated.

...How easy is that.

As you can see in spec the containers filed is -required- which indicates that this filed is mandatory.

<[]Object> indicates that its an array of objects , which means , you can put more than one element under containers

That make sense , because the Pod may contain more than one container.

In YAML we can use - infront of a filed to mark it as an array element.

Lets take a look at the YAML that we wrote earlier

apiVersion: v1

kind: Pod

metadata:

name: coffee-app01

labels:

app: frontend

run: coffee-app01

spec:

containers:

- name: demo-coffee

image: ansilh/demo-coffeeThere is a - under the fist filed of the containers.

If we say that in words ; “containers is an array object which contains one array element with filed name and image”

If you want to add one more container in Pod , we will add one more array element with needed values.

apiVersion: v1

kind: Pod

metadata:

name: coffee-app01

labels:

app: frontend

run: coffee-app01

spec:

containers:

- name: demo-coffee

image: ansilh/demo-coffee

- name: demo-tea

image: ansilh/demo-teaNow the Pod have two containers .

How I know the containers array element need name and image ?

We will use explain command to get those details.

$ kubectl explain pod.spec.containersSnipped Output

...

name <string> -required-

Name of the container specified as a DNS_LABEL. Each container in a pod

must have a unique name (DNS_LABEL). Cannot be updated.

image <string>

Docker image name

...As you can see , name and image are of type string which means , you have to provide a string value to it.

K8S YAML structure

What is YAML ?

Yet Another Markup Language

Kubernetes YAML have below structure

apiVersion:

kind:

metadata:

spec:apiVersion:

Kubernetes have different versions of API for each objects. We discuss about API in detail in upcoming sessions. For now , lets keep it simple as possible.

Pod is one of the kind of object which is part of core v1 API

So for a Pod, we usually see apiVersion: v1

kind:

As explained above we specify the kind of API object with kind: field.

metadata:

We have seen the use of metadata earlier.

As the name implies , we usually store name of object and labels in metadata field.

spec:

Object specification will go hear.

The specification will depend on the kind and apiVersion we use

Exploring Pod spec

Lets write a Pod specification YAML

apiVersion: v1

kind: Pod

metadata:

name: coffee-app01

labels:

app: frontend

run: coffee-app01

spec:

containers:

- name: demo-coffee

image: ansilh/demo-coffeeIn above specification , you can see that we have specified name and labels in matadata field.

The spec starts with cotainer field and we have added a container specification under it.

You might be wondering , how can we memories all these options. In reality , you don’t have to.

We will discuss about it in next session.

Services

Subsections of Services

Expose services in Pod

Service

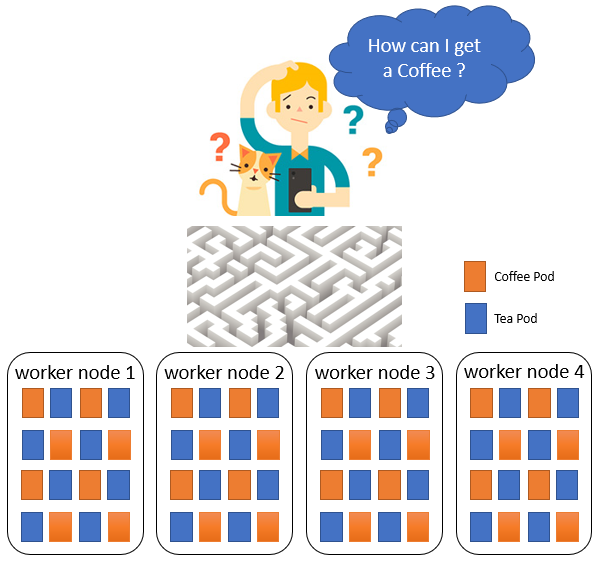

A Coffee Pod running in cluster and its listening on port 9090 on Pod’s IP. How can we expose that service to external world so that users can access it ?

We need to expose the service.

As we know , the Pod IP is not routable outside of the cluster. So we need a mechanism to reach the host’s port and then that traffic should be diverted to Pod’s port.

Lets create a Pod Yaml first.

$ vi coffe.yamlapiVersion: v1

kind: Pod

metadata:

name: coffee

spec:

containers:

- image: ansilh/demo-coffee

name: coffeeCreate Yaml

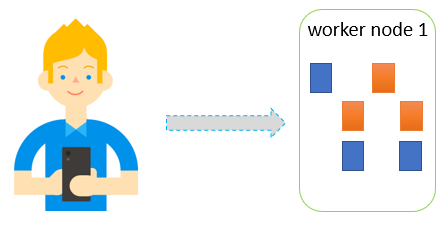

$ kubectl create -f coffe.yamlExpose the Pod with below command

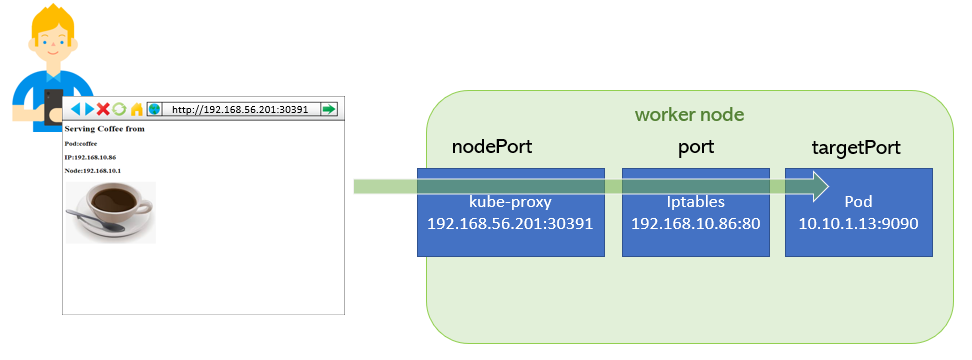

$ kubectl expose pod coffee --port=80 --target-port=9090 --type=NodePortThis will create a Service object in kubernetes , which will map the Node’s port 30836 to Service IP/Port 192.168.10.86:80

We can see the derails using kubectl get service command

$ kubectl get service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

coffee NodePort 192.168.10.86 <none> 80:30391/TCP 6s

kubernetes ClusterIP 192.168.10.1 <none> 443/TCP 26hWe can also see that the port is listening and kube-proxy is the one listening on that port.

$ sudo netstat -tnlup |grep 30836

tcp6 0 0 :::30391 :::* LISTEN 2785/kube-proxyNow you can open browser and access the Coffee app using URL http://192.168.56.201:30391

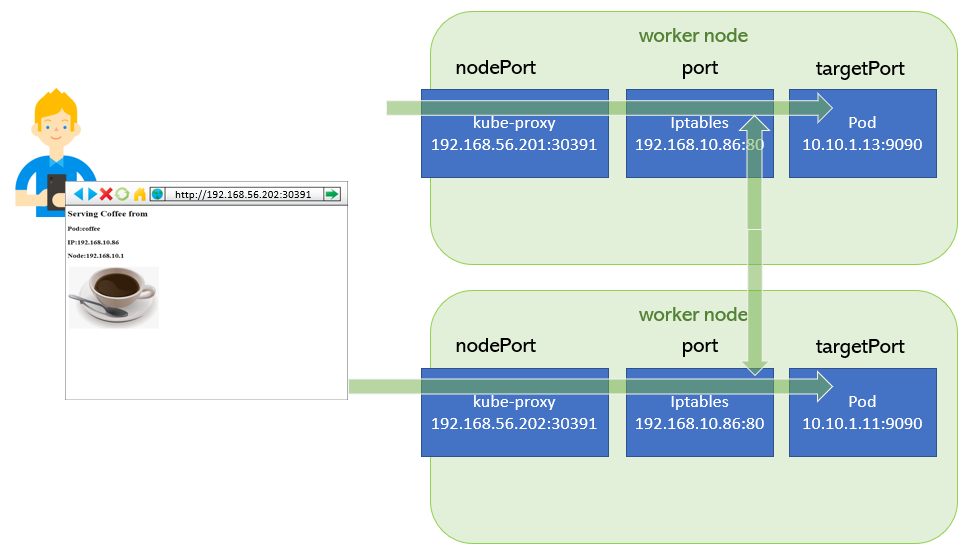

Ports in Service Objects

nodePort

This setting makes the service visible outside the Kubernetes cluster by the node’s IP address and the port number declared in this property. The service also has to be of type NodePort (if this field isn’t specified, Kubernetes will allocate a node port automatically).

port

Expose the service on the specified port internally within the cluster. That is, the service becomes visible on this port, and will send requests made to this port to the pods selected by the service.

targetPort

This is the port on the pod that the request gets sent to. Your application needs to be listening for network requests on this port for the service to work.

NodePort

NodePort Exposes the service on each Node’s IP at a static port (the NodePort). A ClusterIP service, to which the NodePort service will route, is automatically created. You’ll be able to contact the NodePort service, from outside the cluster, by requesting

How nodePort works

kube-proxy watches the Kubernetes master for the addition and removal of Service and Endpoints objects.

(We will discuss about Endpoints later in this session.)

For each Service, it opens a port (randomly chosen) on the local node. Any connections to this “proxy port” will be proxied to one of the Service’s backend Pods (as reported in Endpoints). Lastly, it installs iptables rules which capture traffic to the Service’s clusterIP (which is virtual) and Port and redirects that traffic to the proxy port which proxies the backend Pod.

nodePort workflow.

nodePort->30391port->80targetPort->9090

ClusterIP

It exposes the service on a cluster-internal IP.

When we expose a pod using kubectl expose command , we are creating a service object in API.

Choosing this value makes the service only reachable from within the cluster. This is the default ServiceType.

We can see the Service spec using --dry-run & --output=yaml

$ kubectl expose pod coffee --port=80 --target-port=9090 --type=ClusterIP --dry-run --output=yaml

Output

apiVersion: v1

kind: Service

metadata:

creationTimestamp: null

labels:

run: coffee

name: coffee

spec:

ports:

- port: 80

protocol: TCP

targetPort: 9090

selector:

run: coffee

type: ClusterIP

status:

loadBalancer: {}Cluster IP service is useful when you don’t want to expose the service to external world. eg:- database service.

With service names , a frontend tier can access the database backend without knowing the IPs of the Pods.

CoreDNS (kube-dns) will dynamically create a service DNS entry and that will be resolvable from Pods.

Verify Service DNS

Start debug-tools container which is an alpine linux image with network related binaries

$ kubectl run debugger --image=ansilh/debug-tools --restart=Never$ kubectl exec -it debugger -- /bin/sh

/ # nslookup coffee

Server: 192.168.10.10

Address: 192.168.10.10#53

Name: coffee.default.svc.cluster.local

Address: 192.168.10.86

/ # nslookup 192.168.10.86

86.10.168.192.in-addr.arpa name = coffee.default.svc.cluster.local.

/ #coffee.default.svc.cluster.local

^ ^ ^ k8s domain

| | | |-----------|

| | +--------------- Indicates that its a service

| +---------------------- Namespace

+----------------------------- Service NameLoadBalancer

Exposes the service externally using a cloud provider’s load balancer. NodePort and ClusterIP services, to which the external load balancer will route, are automatically created.

We will discuss more about this topic later in this training.

Endpoints

Pods behind a service.

Lets

Lets describe the service to see how the mapping of Pods works in a service object.

(Yes , we are slowly moving from general wordings to pure kubernetes terms)

$ kubectl describe service coffee

Name: coffee

Namespace: default

Labels: run=coffee

Annotations: <none>

Selector: run=coffee

Type: NodePort

IP: 192.168.10.86

Port: <unset> 80/TCP

TargetPort: 9090/TCP

NodePort: <unset> 30391/TCP

Endpoints: 10.10.1.13:9090

Session Affinity: None

External Traffic Policy: ClusterHere the label run=coffee is the one which creates the mapping from service to Pod.

Any pod with label run=coffee will be mapped under this service.

Those mappings are called Endpoints.

Lets see the endpoints of service coffee

$ kubectl get endpoints coffee

NAME ENDPOINTS AGE

coffee 10.10.1.13:9090 3h48mAs of now only one pod endpoint is mapped under this service.

lets create one more Pod with same label and see how it affects endpoints.

$ kubectl run coffee01 --image=ansilh/demo-coffee --restart=Never --labels=run=coffeeNow we have one more Pod

$ kubectl get pods

NAME READY STATUS RESTARTS AGE

coffee 1/1 Running 0 15h

coffee01 1/1 Running 0 6sLets check the endpoint

$ kubectl get endpoints coffee

NAME ENDPOINTS AGE

coffee 10.10.1.13:9090,10.10.1.19:9090 3h51mNow we have two Pod endpoints mapped to this service. So the requests comes to coffee service will be served from these pods in a round robin fashion.

Multi-Container Pods

In this session we will create Pods with more than one containers and few additional features in k8s.

Subsections of Multi-Container Pods

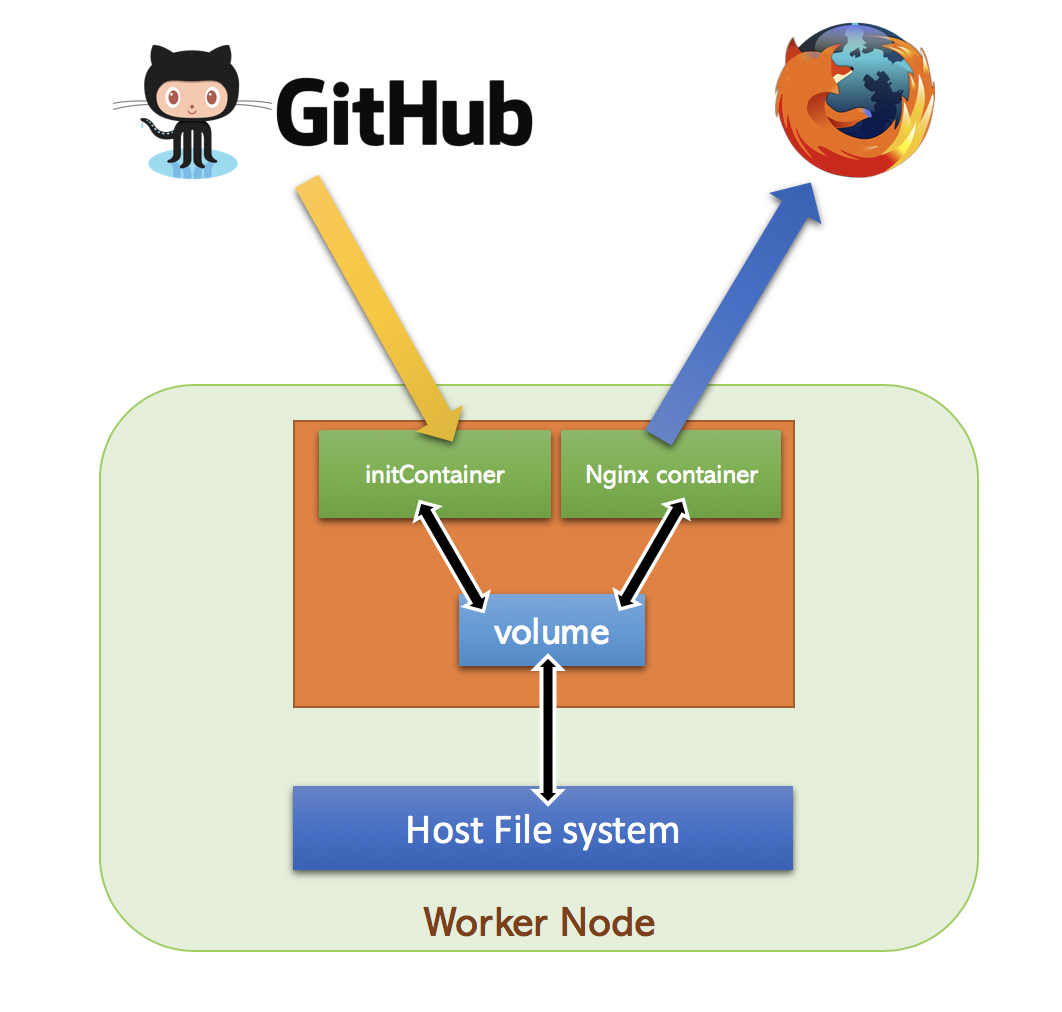

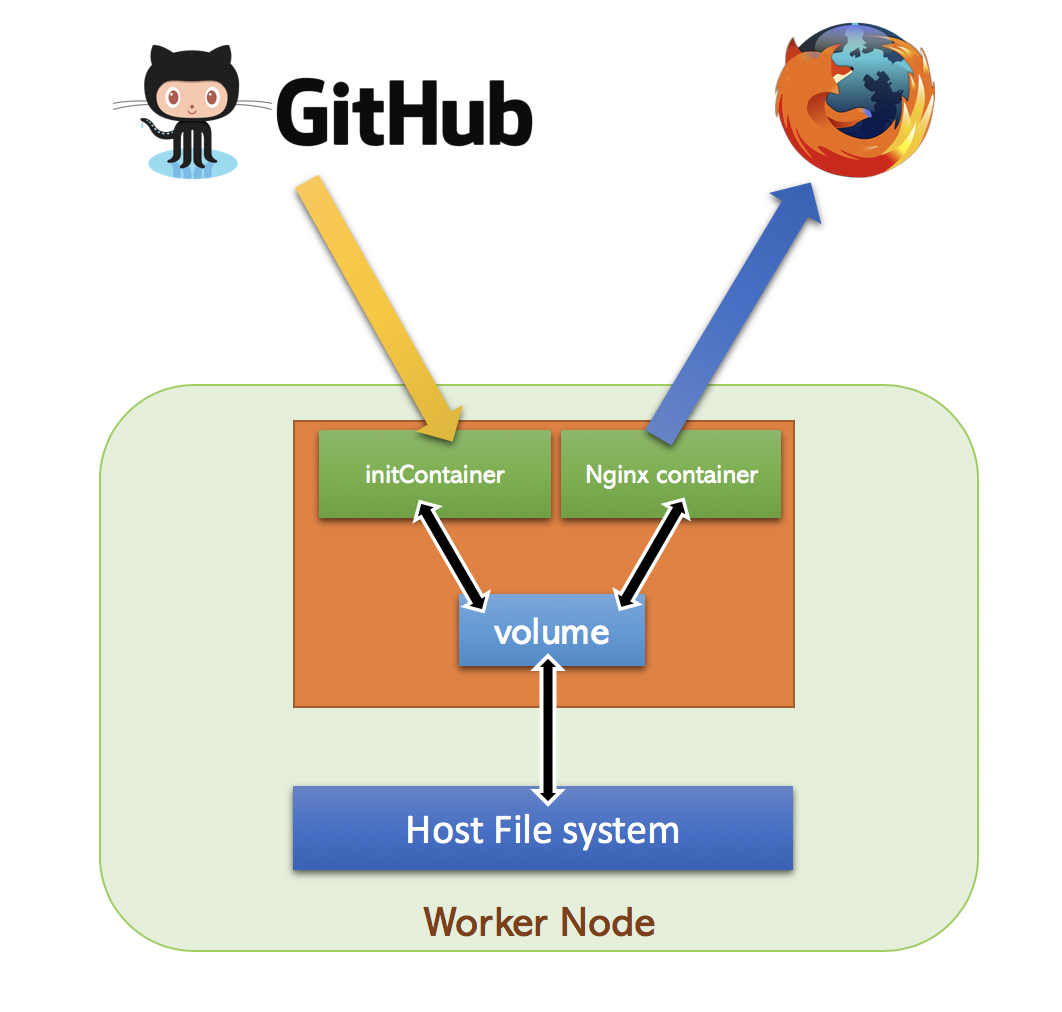

InitContainer

In this session , we will discuss about InitContainer

In this session , we will discuss about InitContainer

Non-persistent web server

As we already know ,containers are ephemeral and the modifications will be lost when container is destroyed.

In this example , we will download webpages from Github repository and store it in a emptyDir volume.

From this emptyDir volume , we will serve the HTML pages using an Nginx Pod

emptyDir is a volume type , just like hostPath , but the contents of emptyDir will be destroyed when Pod is stopped.

So lets write a Pod specification for Nginx container and add InitContainer to download HTML page

apiVersion: v1

kind: Pod

metadata:

labels:

run: demo-web

name: demo-web

spec:

volumes:

- name: html

emptyDir: {}

containers:

- image: nginx

name: demo-web

volumeMounts:

- name: html

mountPath: /usr/share/nginx/html

initContainers:

- image: ansilh/debug-tools

name: git-pull

args:

- git

- clone

- https://github.com/ansilh/k8s-demo-web.git

- /html/.

volumeMounts:

- name: html

mountPath: /html/Problem with this design is , no way to pull the changes once Pod is up. InitContainer run only once during the startup of the Pod.

Incase of InitContainer failure , Pod startup will fail and never start other containers.

We can specify more than one initcontainer if needed. Startup of initcontainer will be sequential and the order will be selected based on the order in yaml spec.

In next session , we will discuss about other design patterns for Pod.

Inject data to Pod

Inject data to pod via Environmental variable

We will create a Coffee Pod

$ kubectl run tea --image=ansilh/demo-tea --env=MY_NODE_NAME=scratch --restart=Never --dry-run -o yaml >pod-with-env.yamlapiVersion: v1

kind: Pod

metadata:

creationTimestamp: null

labels:

run: tea

name: tea

spec:

containers:

- env:

- name: MY_NODE_NAME

value: scratch

image: ansilh/demo-tea

name: coffee-new

resources: {}

dnsPolicy: ClusterFirst

restartPolicy: Never

status: {}Lets run this Pod

$ kubectl create -f pod-with-env.yaml$ kubectl get pods

NAME READY STATUS RESTARTS AGE

tea 1/1 Running 0 7sLets expose the pod as NodePort

$ kubectl expose pod tea --port=80 --target-port=8080 --type=NodePort$ kubectl get svc tea

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

tea NodePort 192.168.10.37 <none> 80:32258/TCP 42sAccess the service using browser uisng node IP and port 32258

You will see below in Page

Node:scratch

Expose Pod fields to containers

Lets extract the nodeName from spec ( Excuse me ? yeah we will see that in a moment )

k8s@k8s-master-01:~$ kubectl get pods tea -o=jsonpath='{.spec.nodeName}' && echo

k8s-worker-01

k8s@k8s-master-01:~$ kubectl get pods tea -o=jsonpath='{.status.hostIP}' && echo

192.168.56.202

k8s@k8s-master-01:~$ kubectl get pods tea -o=jsonpath='{.status.podIP}' && echo

10.10.1.23

k8s@k8s-master-01:~$To get the JSON path , first we need to get the entire object output in JSON.

We have used output in YAML so far because its easy . But internally kubectl convers YAML to JSON

$ kubectl get pod tea -o json{

"apiVersion": "v1",

"kind": "Pod",

"metadata": {

"annotations": {

"cni.projectcalico.org/podIP": "10.10.1.23/32"

},

"creationTimestamp": "2019-01-06T15:09:36Z",

"labels": {

"run": "tea"

},

"name": "tea",

"namespace": "default",

"resourceVersion": "218696",

"selfLink": "/api/v1/namespaces/default/pods/tea",

"uid": "14c1715b-11c5-11e9-9f0f-0800276a1bd2"

},

"spec": {

"containers": [

{

"env": [

{

"name": "MY_NODE_NAME",

"value": "scratch"

}

],

"image": "ansilh/demo-tea",

"imagePullPolicy": "Always",

"name": "coffee-new",

"resources": {},

"terminationMessagePath": "/dev/termination-log",

"terminationMessagePolicy": "File",

"volumeMounts": [

{

"mountPath": "/var/run/secrets/kubernetes.io/serviceaccount",

"name": "default-token-72pzg",

"readOnly": true

}

]

}

],

"dnsPolicy": "ClusterFirst",

"enableServiceLinks": true,

"nodeName": "k8s-worker-01",

"priority": 0,

"restartPolicy": "Never",

"schedulerName": "default-scheduler",

"securityContext": {},

"serviceAccount": "default",

"serviceAccountName": "default",

"terminationGracePeriodSeconds": 30,

"tolerations": [

{

"effect": "NoExecute",

"key": "node.kubernetes.io/not-ready",

"operator": "Exists",

"tolerationSeconds": 300

},

{

"effect": "NoExecute",

"key": "node.kubernetes.io/unreachable",

"operator": "Exists",

"tolerationSeconds": 300

}

],

"volumes": [

{

"name": "default-token-72pzg",

"secret": {

"defaultMode": 420,

"secretName": "default-token-72pzg"

}

}

]

},

"status": {

"conditions": [

{

"lastProbeTime": null,

"lastTransitionTime": "2019-01-06T15:09:36Z",

"status": "True",

"type": "Initialized"

},

{

"lastProbeTime": null,

"lastTransitionTime": "2019-01-06T15:09:42Z",

"status": "True",

"type": "Ready"

},

{

"lastProbeTime": null,

"lastTransitionTime": "2019-01-06T15:09:42Z",

"status": "True",

"type": "ContainersReady"

},

{

"lastProbeTime": null,

"lastTransitionTime": "2019-01-06T15:09:36Z",

"status": "True",

"type": "PodScheduled"

}

],

"containerStatuses": [

{

"containerID": "docker://291a72e7fdab6a9f7afc47c640126cf596f5e071903b6a9055b44ef5bcb1c104",

"image": "ansilh/demo-tea:latest",

"imageID": "docker-pullable://ansilh/demo-tea@sha256:998d07a15151235132dae9781f587ea4d2822c62165778570145b0f659dda7bb",

"lastState": {},

"name": "coffee-new",

"ready": true,

"restartCount": 0,

"state": {

"running": {

"startedAt": "2019-01-06T15:09:42Z"

}

}

}

],

"hostIP": "192.168.56.202",

"phase": "Running",

"podIP": "10.10.1.23",

"qosClass": "BestEffort",

"startTime": "2019-01-06T15:09:36Z"

}

}Remove below from pod-with-env.yaml

- name: MY_NODE_NAME

value: scratchAdd below Pod spec

- name: MY_NODE_NAME

valueFrom:

fieldRef:

fieldPath: spec.nodeNameResulting Pod Yaml

apiVersion: v1

kind: Pod

metadata:

creationTimestamp: null

labels:

run: tea

name: tea

spec:

containers:

- env:

- name: MY_NODE_NAME

valueFrom:

fieldRef:

fieldPath: spec.nodeName

image: ansilh/demo-tea

name: coffee-new

resources: {}

dnsPolicy: ClusterFirst

restartPolicy: Never

status: {}Delete the running pod files

$ kubectl delete pod teaCreate the pod with modified yaml file

$ kubectl create -f pod-with-env.yamlMake sure endpoint is up in service

$ kubectl get ep tea

NAME ENDPOINTS AGE

tea 10.10.1.26:8080 31mRefresh the browser page. This time you will see Node:k8s-worker-01

Lets do a cleanup on default namespace.

$ kubectl delete --all pods

$ kubectl delete --all servicesNow you know

- How to use export Objects in Yaml and Json format

- How to access each fields using

jsonpath - How to inject environmental variables to

Pod - How to inject system generated fields to

Podusing environmental variables

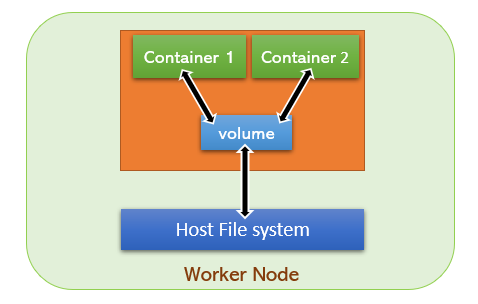

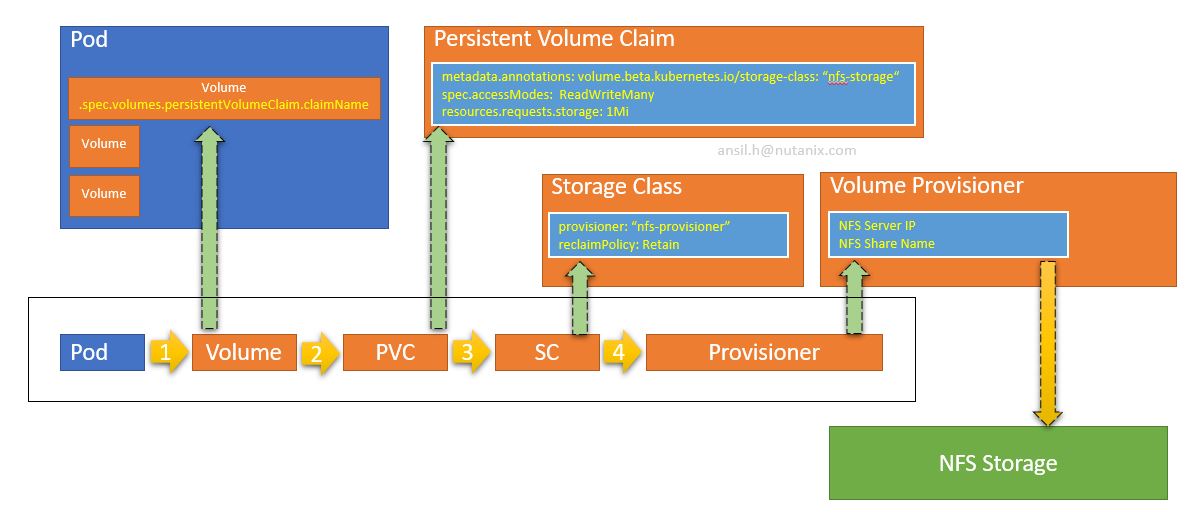

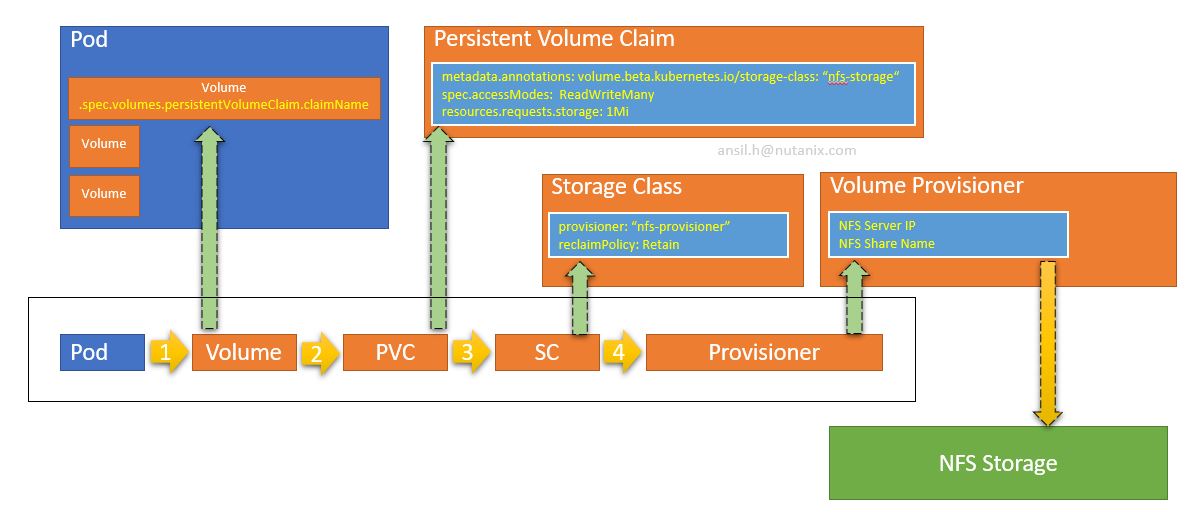

Introduction to Volumes

Persistent volumes

When a Pod dies , all container’s contents will be destroyed and never preserved by default. Sometimes you need to store the contents persistently (for eg:- etcd pod)

Kubernetes have a Volumes filed in Pod spec , which can be used to mount a volume inside container.

Lets explain the volume specs

$ kubectl explain pod.spec.volumesSo when you write Yaml , you have to put volumes object in spec. As we have seen , volumes type is <[]Object> ; means its an array

So the contents below volumes should start with a dash “-”. Name is a mandatory field , so lets write those.

spec:

volumes:

- name: "data"We will use hostPath for now

$ kubectl explain pod.spec.volumes.hostPath

KIND: Pod

VERSION: v1

RESOURCE: hostPath <Object>

DESCRIPTION:

HostPath represents a pre-existing file or directory on the host machine

that is directly exposed to the container. This is generally used for

system agents or other privileged things that are allowed to see the host

machine. Most containers will NOT need this. More info:

https://kubernetes.io/docs/concepts/storage/volumes#hostpath

Represents a host path mapped into a pod. Host path volumes do not support

ownership management or SELinux relabeling.

FIELDS:

path <string> -required-

Path of the directory on the host. If the path is a symlink, it will follow

the link to the real path. More info:

https://kubernetes.io/docs/concepts/storage/volumes#hostpath

type <string>

Type for HostPath Volume Defaults to "" More info:

https://kubernetes.io/docs/concepts/storage/volumes#hostpath

k8s@k8s-master-01:~$Host path needs a path on the host , so lets add that as well to the spec

spec:

volumes:

- name: "data"

hostPath:

path: "/var/data"This will add a volume to Pod

Now we have to tell the pods to use it.

In containers specification, we have volumeMounts field which can be used to mount the volume.

$ kubectl explain pod.spec.containers.volumeMounts

KIND: Pod

VERSION: v1

RESOURCE: volumeMounts <[]Object>

DESCRIPTION:

Pod volumes to mount into the container's filesystem. Cannot be updated.

VolumeMount describes a mounting of a Volume within a container.

FIELDS:

mountPath <string> -required-

Path within the container at which the volume should be mounted. Must not

contain ':'.

mountPropagation <string>

mountPropagation determines how mounts are propagated from the host to

container and the other way around. When not set, MountPropagationNone is

used. This field is beta in 1.10.

name <string> -required-

This must match the Name of a Volume.

readOnly <boolean>

Mounted read-only if true, read-write otherwise (false or unspecified).

Defaults to false.

subPath <string>

Path within the volume from which the container's volume should be mounted.

Defaults to "" (volume's root).volumeMounts is <[]Object> . mountPath is required and name

name must match the Name of a Volume

Resulting Pod spec will become ;

spec:

volumes:

- name: "data"

hostPath:

path: "/var/data"

containers:

- name: nginx

image: nginx

volumeMounts:

- name: "data"

mountPath: "/usr/share/nginx/html"Lets add the basic fields to complete the Yaml and save the file as nginx.yaml

apiVersion: v1

kind: Pod

metadata:

name: nginx-pod01

spec:

volumes:

- name: "data"

hostPath:

path: "/var/data"

containers:

- name: nginx

image: nginx

volumeMounts:

- name: "data"

mountPath: "/usr/share/nginx/html"Create the Pod

kubectl create -f nginx.yamlCheck where its running.

$ kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-pod01 1/1 Running 0 55s 10.10.1.27 k8s-worker-01 <none> <none>Lets expose this Pod first.

$ kubectl expose pod nginx-pod01 --port=80 --target-port=80 --type=NodePorterror: couldn't retrieve selectors via --selector flag or introspection: the pod has no labels and cannot be exposed

See 'kubectl expose -h' for help and examples.This indicates that we didn’t add label , because the service needs a label to map the Pod to endpoint

Lets add a label to the Pod.

$ kubectl label pod nginx-pod01 run=nginx-pod01Now we can we can expose the Pod

$ kubectl expose pod nginx-pod01 --port=80 --target-port=80 --type=NodePortGet the node port which service is listening to

$ kubectl get svc nginx-pod01

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

nginx-pod01 NodePort 192.168.10.51 <none> 80:31538/TCP 26sYou will get 403 Forbidden page , because there is no html page to load.

Now we can go to the node where the Pod is running and check the path /var/data

k8s@k8s-worker-01:~$ ls -ld /var/data

drwxr-xr-x 2 root root 4096 Jan 7 00:52 /var/data

k8s@k8s-worker-01:~$ cd /var/data

k8s@k8s-worker-01:/var/data$ ls -lrt

total 0

k8s@k8s-worker-01:/var/data$

Nothing is there.The directory is owned by root , so you have to create the file index.html with root.

k8s@k8s-worker-01:/var/data$ sudo -i

[sudo] password for k8s:

root@k8s-worker-01:~# cd /var/data

root@k8s-worker-01:/var/data#

root@k8s-worker-01:/var/data# echo "This is a test page" >index.html

root@k8s-worker-01:/var/data#

Reload the web page and you should see “This is a test page”

Now you know;

- How to create a volume.

- How to mount a volume.

- How to access the contents of volume from host.

Pod - manual scheduling

Node Selector

Suppose you have a Pod which needs to be running on a Pod which is having SSD in it.

First we need to add a label to the node which is having SSD

$ kubectl label node k8s-worker-01 disktype=ssdNow we can write a Pod spec with nodeSelector

apiVersion: v1

kind: Pod

metadata:

name: nginx

labels:

env: test

spec:

containers:

- name: nginx

image: nginx

nodeSelector:

disktype: ssdScheduler will look at the node selector and select apropriate node to run the pod

nodeName

- Kube-scheduler will find a suitable pod by evaluating the constraints.

- Scheduler will modify the value of .spec.nodeName of Pod object .

- kubelet will observe the change via API server and will start the pod based on the specification.

This means , we can manually specify the nodeName in Pod spec and schedule it.

You can read more about nodeName in below URL

https://kubernetes.io/docs/concepts/configuration/assign-pod-node/#nodename

Pod design patterns

When the containers have the exact same lifecycle, or when the containers must run on the same node. The most common scenario is that you have a helper process that needs to be located and managed on the same node as the primary container.

Another reason to combine containers into a single pod is for simpler communication between containers in the pod. These containers can communicate through shared volumes (writing to a shared file or directory) and through inter-process communication (semaphores or shared memory).

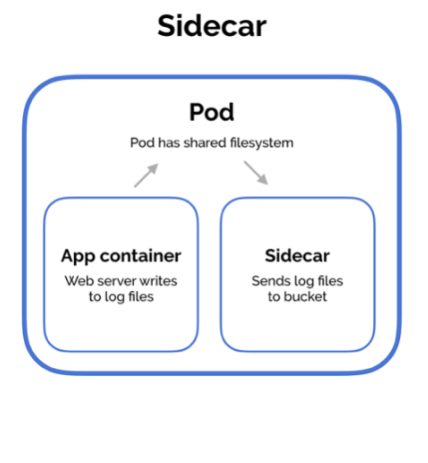

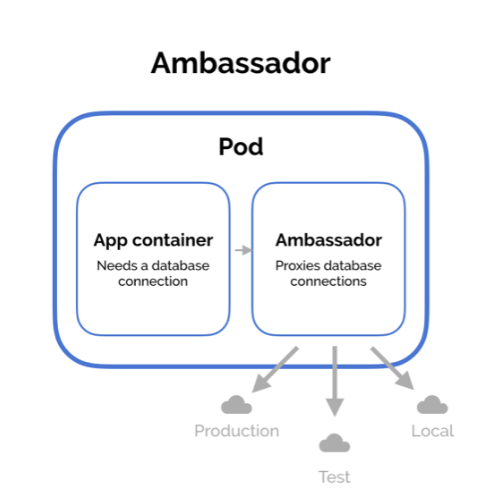

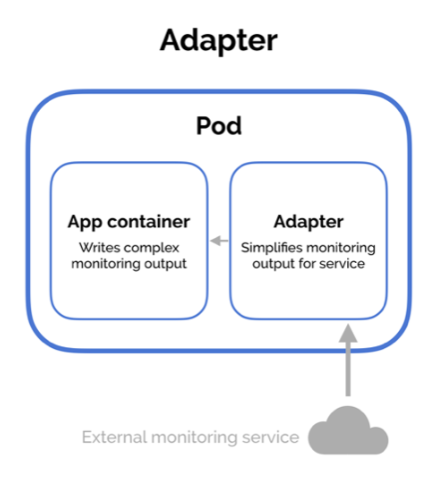

There are three common design patterns and use-cases for combining multiple containers into a single pod. We’ll walk through the sidecar pattern, the adapter pattern, and the ambassador pattern.

Example #1: Sidecar containers

Sidecar containers extend and enhance the “main” container, they take existing containers and make them better. As an example, consider a container that runs the Nginx web server. Add a different container that syncs the file system with a git repository, share the file system between the containers and you have built Git push-to-deploy.

apiVersion: v1

kind: Pod

metadata:

labels:

run: demo-web

name: demo-web

spec:

volumes:

- name: html

emptyDir: {}

containers:

- image: nginx

name: demo-web

volumeMounts:

- name: html

mountPath: /usr/share/nginx/html

- image: ansilh/debug-tools

name: git-pull

args:

- sh

- -c

- 'while true ; do [ ! -d /html/.git ] && git clone https://github.com/ansilh/k8s-demo-web.git /html/ || { cd /html; git pull; } ; date; sleep 5 ; done'

volumeMounts:

- name: html

mountPath: /html/Lets do a tail on the logs and see how the git-pull works

$ kubectl logs demo-web git-pull -f