Chapter 20

Subsections of ELK Stack

Elasticsearch

We will create `hostPath` volumes for testing purposes only, because `hostPath` data lives on the local disk of whichever node the pod runs on. In production, we would use PVs from a volume provisioner, or dynamic volume provisioning.

- Create one volume per Elasticsearch node (the StatefulSet in this chapter runs three replicas)

```shell
cat <<EOF >es-volumes-manual.yaml
---
kind: PersistentVolume
apiVersion: v1
metadata:
  name: pv-001
  labels:
    type: local
spec:
  storageClassName: manual
  capacity:
    storage: 50Gi
  accessModes:
    - ReadWriteOnce
  hostPath:
    path: "/tmp/data01"
---
kind: PersistentVolume
apiVersion: v1
metadata:
  name: pv-002
  labels:
    type: local
spec:
  storageClassName: manual
  capacity:
    storage: 50Gi
  accessModes:
    - ReadWriteOnce
  hostPath:
    path: "/tmp/data02"
---
kind: PersistentVolume
apiVersion: v1
metadata:
  name: pv-003
  labels:
    type: local
spec:
  storageClassName: manual
  capacity:
    storage: 50Gi
  accessModes:
    - ReadWriteOnce
  hostPath:
    path: "/tmp/data03"
---
EOF
```

```shell
$ kubectl create -f es-volumes-manual.yaml
$ kubectl get pv
```

Output

```text
NAME     CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM   STORAGECLASS   REASON   AGE
pv-001   50Gi       RWO            Retain           Available           manual                  8s
pv-002   50Gi       RWO            Retain           Available           manual                  8s
pv-003   50Gi       RWO            Retain           Available           manual                  8s
```
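The three PV definitions above differ only in their index. If you run a different number of Elasticsearch replicas, a small POSIX shell function can print one `hostPath` PV per node instead of copying blocks by hand. This is a sketch: the function name `gen_es_pvs` is hypothetical, and it simply reuses the `pv-00N` names, `/tmp/dataNN` paths, `manual` storage class and 50Gi size from the manifest above.

```shell
# Hypothetical helper: print N hostPath PersistentVolumes (testing only),
# mirroring the hand-written es-volumes-manual.yaml.
gen_es_pvs() {
  count=${1:-3}    # number of Elasticsearch nodes (StatefulSet replicas)
  size=${2:-50Gi}  # must be >= the storage requested by volumeClaimTemplates
  i=1
  while [ "$i" -le "$count" ]; do
    pv=$(printf 'pv-%03d' "$i")         # pv-001, pv-002, ...
    dir=$(printf '/tmp/data%02d' "$i")  # /tmp/data01, /tmp/data02, ...
    cat <<EOF
---
kind: PersistentVolume
apiVersion: v1
metadata:
  name: $pv
  labels:
    type: local
spec:
  storageClassName: manual
  capacity:
    storage: $size
  accessModes:
    - ReadWriteOnce
  hostPath:
    path: "$dir"
EOF
    i=$((i + 1))
  done
}
```

Usage: `gen_es_pvs 3 > es-volumes-manual.yaml && kubectl create -f es-volumes-manual.yaml`.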

Create a namespace

```shell
cat <<EOF >kube-logging.yaml
kind: Namespace
apiVersion: v1
metadata:
  name: kube-logging
EOF
kubectl create -f kube-logging.yaml
```

- Create a headless service

```shell
cat <<EOF >es-service.yaml
kind: Service
apiVersion: v1
metadata:
  name: elasticsearch
  namespace: kube-logging
  labels:
    app: elasticsearch
spec:
  selector:
    app: elasticsearch
  clusterIP: None
  ports:
    - port: 9200
      name: rest
    - port: 9300
      name: inter-node
EOF
kubectl create -f es-service.yaml
```

Create the StatefulSet
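Two settings in the StatefulSet manifest depend on `replicas: 3`. `discovery.zen.ping.unicast.hosts` lists each pod by the stable DNS name the headless Service gives it (`<pod>.<service>`), and `discovery.zen.minimum_master_nodes` should be a quorum of the master-eligible nodes, floor(N / 2) + 1, which is 2 for three nodes. A minimal POSIX shell sketch that derives both values if you change the replica count; the function names are hypothetical.

```shell
# Hypothetical helpers: derive the Elasticsearch 6.x discovery settings
# from the StatefulSet name, the headless Service name and the replica count.

# Comma-separated pod DNS names: <sts>-0.<svc>,<sts>-1.<svc>,...
es_unicast_hosts() {
  sts=$1; svc=$2; replicas=$3
  hosts=""
  i=0
  while [ "$i" -lt "$replicas" ]; do
    # ${hosts:+$hosts,} adds a comma only after the first entry
    hosts="${hosts:+$hosts,}$sts-$i.$svc"
    i=$((i + 1))
  done
  printf '%s\n' "$hosts"
}

# Quorum of master-eligible nodes: floor(N / 2) + 1 (integer division truncates).
es_minimum_master_nodes() {
  printf '%s\n' "$(( $1 / 2 + 1 ))"
}
```

For example, `es_unicast_hosts es-cluster elasticsearch 3` prints the same host list as the manifest, and `es_minimum_master_nodes 5` prints `3`.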

```shell
cat <<EOF >es_statefulset.yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: es-cluster
  namespace: kube-logging
spec:
  serviceName: elasticsearch
  replicas: 3
  selector:
    matchLabels:
      app: elasticsearch
  template:
    metadata:
      labels:
        app: elasticsearch
    spec:
      containers:
        - name: elasticsearch
          image: docker.elastic.co/elasticsearch/elasticsearch-oss:6.4.3
          resources:
            limits:
              cpu: 1000m
            requests:
              cpu: 100m
          ports:
            - containerPort: 9200
              name: rest
              protocol: TCP
            - containerPort: 9300
              name: inter-node
              protocol: TCP
          volumeMounts:
            - name: data
              mountPath: /usr/share/elasticsearch/data
          env:
            - name: cluster.name
              value: k8s-logs
            - name: node.name
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: discovery.zen.ping.unicast.hosts
              value: "es-cluster-0.elasticsearch,es-cluster-1.elasticsearch,es-cluster-2.elasticsearch"
            - name: discovery.zen.minimum_master_nodes
              value: "2"
            - name: ES_JAVA_OPTS
              value: "-Xms512m -Xmx512m"
      initContainers:
        - name: fix-permissions
          image: busybox
          command: ["sh", "-c", "chown -R 1000:1000 /usr/share/elasticsearch/data"]
          securityContext:
            privileged: true
          volumeMounts:
            - name: data
              mountPath: /usr/share/elasticsearch/data
        - name: increase-vm-max-map
          image: busybox
          command: ["sysctl", "-w", "vm.max_map_count=262144"]
          securityContext:
            privileged: true
        - name: increase-fd-ulimit
          image: busybox
          # Note: ulimit only changes the limit for this init container's own
          # shell; it does not carry over to the elasticsearch container.
          command: ["sh", "-c", "ulimit -n 65536"]
          securityContext:
            privileged: true
  volumeClaimTemplates:
    - metadata:
        name: data
        labels:
          app: elasticsearch
      spec:
        accessModes: [ "ReadWriteOnce" ]
        storageClassName: manual
        resources:
          requests:
            storage: 50Gi
EOF
kubectl create -f es_statefulset.yaml
```

- Monitor the StatefulSet rollout status

```shell
kubectl rollout status sts/es-cluster --namespace=kube-logging
```

- Verify the Elasticsearch cluster by checking its state

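Forward port 9200 of the es-cluster-0 pod to port 9200 on localhost:

```shell
$ kubectl port-forward es-cluster-0 9200:9200 --namespace=kube-logging
```

The port-forward keeps running in the foreground, so from a second terminal, execute a curl command to see the cluster state.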

In the output below, the master node is `J0ZQqGI0QTqljoLxh5O3-A`, which is es-cluster-0.

```shell
curl 'http://localhost:9200/_cluster/state?pretty'
```

```text
{
  "cluster_name" : "k8s-logs",
  "compressed_size_in_bytes" : 358,
  "cluster_uuid" : "ahM0thu1RSKQ5CXqZOdPHA",
  "version" : 3,
  "state_uuid" : "vDwLQHzJSGixU2AItNY1KA",
  "master_node" : "J0ZQqGI0QTqljoLxh5O3-A",
  "blocks" : { },
  "nodes" : {
    "jZdz75kSSSWDpkIHYoRFIA" : {
      "name" : "es-cluster-1",
      "ephemeral_id" : "flfl4-TURLS_yTUOlZsx5g",
      "transport_address" : "10.10.151.186:9300",
      "attributes" : { }
    },
    "J0ZQqGI0QTqljoLxh5O3-A" : {
      "name" : "es-cluster-0",
      "ephemeral_id" : "qXcnM2V1Tcqbw1cWLKDkSg",
      "transport_address" : "10.10.118.123:9300",
      "attributes" : { }
    },
    "pqGu-mcNQS-OksmiJfCUJA" : {
      "name" : "es-cluster-2",
      "ephemeral_id" : "X0RtmusQS7KM5LOy9wSF3Q",
      "transport_address" : "10.10.36.224:9300",
      "attributes" : { }
    }
  },
  ... (snipped)
```

Kibana
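Kibana reaches Elasticsearch through the `elasticsearch` Service, so it helps to confirm the cluster is healthy before deploying it. A POSIX shell sketch, assuming the port-forward to localhost:9200 from the previous step is still running; the function names and the 30-try / 2-second defaults are arbitrary choices. It queries the `_cat/health` API with `h=status`, which prints only the status column (`green`, `yellow` or `red`).

```shell
# Hypothetical helpers: poll Elasticsearch cluster health through the
# port-forward (assumed to be at http://localhost:9200).

# Print the cluster status with surrounding whitespace removed.
es_cluster_status() {
  curl -s "${1:-http://localhost:9200}/_cat/health?h=status" | tr -d '[:space:]'
}

# Wait until the status is green or yellow. Yellow means every primary shard
# is allocated but some replicas are not; searches and indexing still work.
wait_for_es() {
  url=${1:-http://localhost:9200}
  tries=${2:-30}
  i=1
  while :; do
    health=$(es_cluster_status "$url")
    case $health in
      green|yellow) printf '%s\n' "$health"; return 0 ;;
    esac
    [ "$i" -ge "$tries" ] && return 1
    i=$((i + 1))
    sleep 2
  done
}
```

Usage: `wait_for_es && kubectl create -f kibana.yaml`.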

```shell
cat <<EOF >kibana.yaml
apiVersion: v1
kind: Service
metadata:
  name: kibana
  namespace: kube-logging
  labels:
    app: kibana
spec:
  ports:
    - port: 5601
  selector:
    app: kibana
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kibana
  namespace: kube-logging
  labels:
    app: kibana
spec:
  replicas: 1
  selector:
    matchLabels:
      app: kibana
  template:
    metadata:
      labels:
        app: kibana
    spec:
      containers:
        - name: kibana
          image: docker.elastic.co/kibana/kibana-oss:6.4.3
          resources:
            limits:
              cpu: 1000m
            requests:
              cpu: 100m
          env:
            - name: ELASTICSEARCH_URL
              value: http://elasticsearch:9200
          ports:
            - containerPort: 5601
EOF
kubectl create -f kibana.yaml
kubectl rollout status deployment/kibana --namespace=kube-logging
```

Output

```text
Waiting for deployment "kibana" rollout to finish: 0 of 1 updated replicas are available...
deployment "kibana" successfully rolled out
```

```shell
$ kubectl get pods --namespace=kube-logging
```

Output

```text
NAME                     READY   STATUS    RESTARTS   AGE
es-cluster-0             1/1     Running   0          21m
es-cluster-1             1/1     Running   0          20m
es-cluster-2             1/1     Running   0          19m
kibana-87b7b8cdd-djbl4   1/1     Running   0          72s
```

```shell
$ kubectl port-forward kibana-87b7b8cdd-djbl4 5601:5601 --namespace=kube-logging
```

If kubectl runs on a remote machine, use PuTTY tunneling, or an SSH tunnel if you are on macOS or Linux, to reach that machine's 127.0.0.1:5601 port from your workstation.
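For the SSH route, a minimal sketch: `-L 5601:127.0.0.1:5601` forwards port 5601 on your workstation to 127.0.0.1:5601 on the remote machine, where `kubectl port-forward` is listening, and `-N` opens the tunnel without running a remote command. The function name and the `user@k8s-host` target are placeholders.

```shell
# Hypothetical helper: tunnel local port 5601 to the Kibana port-forward
# running on a remote host ($1, e.g. user@k8s-host).
kibana_ssh_tunnel() {
  # -N: forward only, no remote command
  # -L local_port:host:remote_port, where host is resolved on the remote side
  ssh -N -L 5601:127.0.0.1:5601 "$1"
}
```

Usage: run `kibana_ssh_tunnel user@k8s-host` on your workstation and leave it open while you browse.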

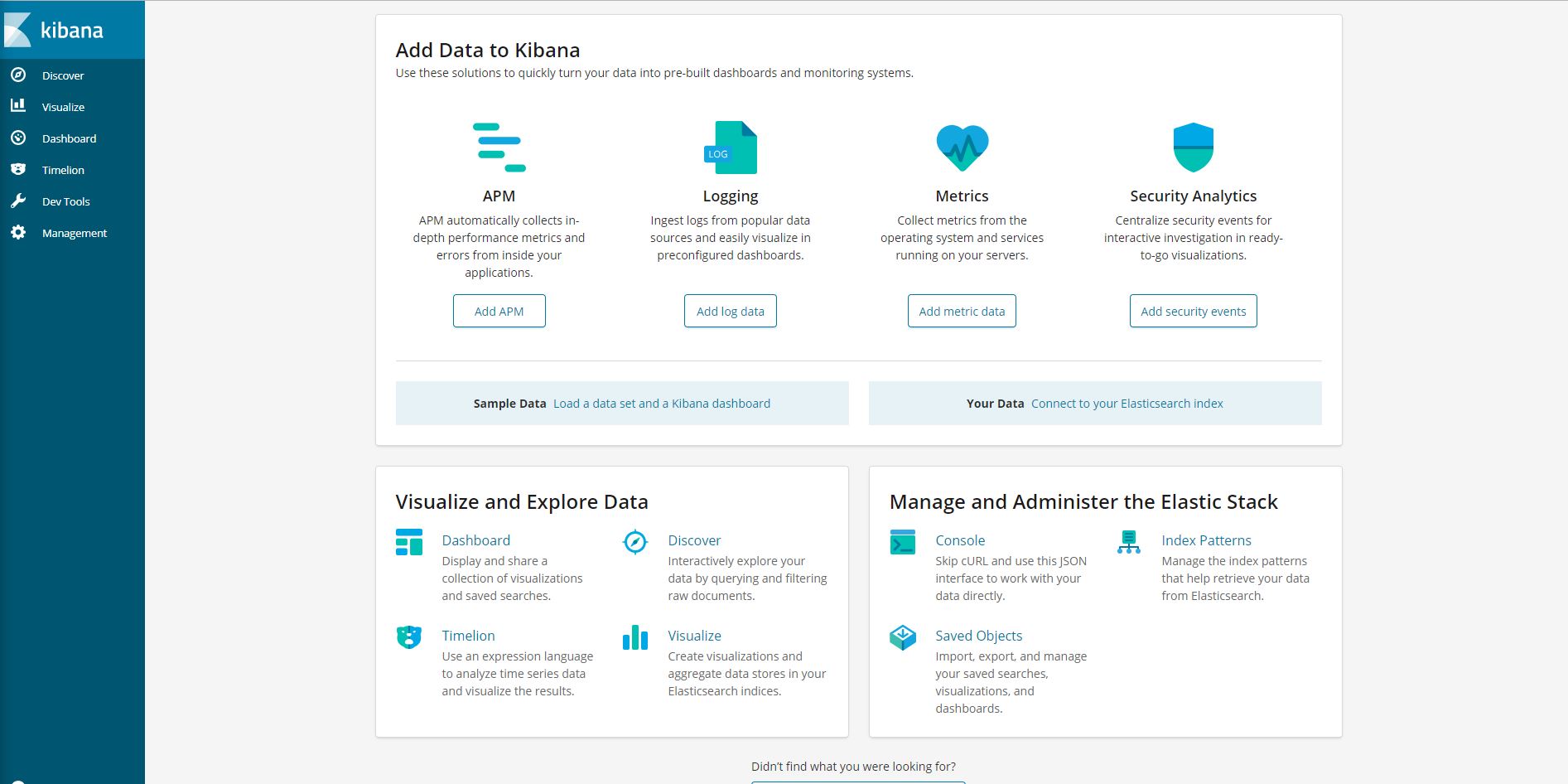

Open http://localhost:5601 in a browser to see the Kibana web interface.
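The pod name `kibana-87b7b8cdd-djbl4` changes whenever the Deployment creates a new pod. `kubectl port-forward` also accepts a `deployment/<name>` target and picks one of its pods, so a small wrapper avoids copying the generated name. The function name is hypothetical; the namespace defaults to `kube-logging`.

```shell
# Hypothetical wrapper: forward local port 5601 to a pod of the kibana
# Deployment without looking up the generated pod name first.
kibana_port_forward() {
  kubectl port-forward deployment/kibana 5601:5601 --namespace="${1:-kube-logging}"
}
```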

Send logs via Fluent Bit

```shell
cat <<EOF >fluent-bit-service-account.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: fluent-bit
  namespace: kube-logging
EOF
```

```shell
$ kubectl create -f fluent-bit-service-account.yaml
```

Output

```text
serviceaccount/fluent-bit created
```

```shell
cat <<EOF >fluent-bit-role.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: fluent-bit-read
rules:
  - apiGroups: [""]
    resources:
      - namespaces
      - pods
    verbs:
      - get
      - list
      - watch
EOF
```

```shell
$ kubectl create -f fluent-bit-role.yaml
```

Output

```text
clusterrole.rbac.authorization.k8s.io/fluent-bit-read created
```

```shell
cat <<EOF >fluent-bit-role-binding.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: fluent-bit-read
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: fluent-bit-read
subjects:
  - kind: ServiceAccount
    name: fluent-bit
    namespace: kube-logging
EOF
```

```shell
$ kubectl create -f fluent-bit-role-binding.yaml
```

Output

```text
clusterrolebinding.rbac.authorization.k8s.io/fluent-bit-read created
```

```shell
cat <<EOF >fluent-bit-configmap.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: fluent-bit-config
  namespace: kube-logging
  labels:
    k8s-app: fluent-bit
data:
  # Configuration files: server, input, filters and output
  # ======================================================
  fluent-bit.conf: |
    [SERVICE]
        Flush         1
        Log_Level     info
        Daemon        off
        Parsers_File  parsers.conf
        HTTP_Server   On
        HTTP_Listen   0.0.0.0
        HTTP_Port     2020

    @INCLUDE input-kubernetes.conf
    @INCLUDE filter-kubernetes.conf
    @INCLUDE output-elasticsearch.conf

  input-kubernetes.conf: |
    [INPUT]
        Name              tail
        Tag               kube.*
        Path              /var/log/containers/*.log
        Parser            docker
        DB                /var/log/flb_kube.db
        Mem_Buf_Limit     5MB
        Skip_Long_Lines   On
        Refresh_Interval  10

  filter-kubernetes.conf: |
    [FILTER]
        Name                kubernetes
        Match               kube.*
        Kube_URL            https://kubernetes.default:443
        Merge_Log           On
        K8S-Logging.Parser  On

  output-elasticsearch.conf: |
    [OUTPUT]
        Name            es
        Match           *
        Host            \${FLUENT_ELASTICSEARCH_HOST}
        Port            \${FLUENT_ELASTICSEARCH_PORT}
        Logstash_Format On
        Retry_Limit     False

  parsers.conf: |
    [PARSER]
        Name   apache
        Format regex
        Regex  ^(?<host>[^ ]*) [^ ]* (?<user>[^ ]*) \[(?<time>[^\]]*)\] "(?<method>\S+)(?: +(?<path>[^\"]*?)(?: +\S*)?)?" (?<code>[^ ]*) (?<size>[^ ]*)(?: "(?<referer>[^\"]*)" "(?<agent>[^\"]*)")?$
        Time_Key time
        Time_Format %d/%b/%Y:%H:%M:%S %z

    [PARSER]
        Name   apache2
        Format regex
        Regex  ^(?<host>[^ ]*) [^ ]* (?<user>[^ ]*) \[(?<time>[^\]]*)\] "(?<method>\S+)(?: +(?<path>[^ ]*) +\S*)?" (?<code>[^ ]*) (?<size>[^ ]*)(?: "(?<referer>[^\"]*)" "(?<agent>[^\"]*)")?$
        Time_Key time
        Time_Format %d/%b/%Y:%H:%M:%S %z

    [PARSER]
        Name   apache_error
        Format regex
        Regex  ^\[[^ ]* (?<time>[^\]]*)\] \[(?<level>[^\]]*)\](?: \[pid (?<pid>[^\]]*)\])?( \[client (?<client>[^\]]*)\])? (?<message>.*)$

    [PARSER]
        Name   nginx
        Format regex
        Regex ^(?<remote>[^ ]*) (?<host>[^ ]*) (?<user>[^ ]*) \[(?<time>[^\]]*)\] "(?<method>\S+)(?: +(?<path>[^\"]*?)(?: +\S*)?)?" (?<code>[^ ]*) (?<size>[^ ]*)(?: "(?<referer>[^\"]*)" "(?<agent>[^\"]*)")?$
        Time_Key time
        Time_Format %d/%b/%Y:%H:%M:%S %z

    [PARSER]
        Name   json
        Format json
        Time_Key time
        Time_Format %d/%b/%Y:%H:%M:%S %z

    [PARSER]
        Name        docker
        Format      json
        Time_Key    time
        Time_Format %Y-%m-%dT%H:%M:%S.%L
        Time_Keep   On
        # Command      |  Decoder | Field | Optional Action
        # =============|==================|=================
        Decode_Field_As   escaped    log

    [PARSER]
        Name        syslog
        Format      regex
        Regex       ^\<(?<pri>[0-9]+)\>(?<time>[^ ]* {1,2}[^ ]* [^ ]*) (?<host>[^ ]*) (?<ident>[a-zA-Z0-9_\/\.\-]*)(?:\[(?<pid>[0-9]+)\])?(?:[^\:]*\:)? *(?<message>.*)$
        Time_Key    time
        Time_Format %b %d %H:%M:%S
EOF
```

```shell
$ kubectl create -f fluent-bit-configmap.yaml
```

Output

```text
configmap/fluent-bit-config created
```

```shell
cat <<EOF >fluent-bit-ds.yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: fluent-bit
  namespace: kube-logging
  labels:
    k8s-app: fluent-bit-logging
    version: v1
    kubernetes.io/cluster-service: "true"
spec:
  # apps/v1 requires an explicit selector that matches the pod template labels
  selector:
    matchLabels:
      k8s-app: fluent-bit-logging
  template:
    metadata:
      labels:
        k8s-app: fluent-bit-logging
        version: v1
        kubernetes.io/cluster-service: "true"
      annotations:
        prometheus.io/scrape: "true"
        prometheus.io/port: "2020"
        prometheus.io/path: /api/v1/metrics/prometheus
    spec:
      containers:
        - name: fluent-bit
          image: fluent/fluent-bit:1.0.4
          imagePullPolicy: Always
          ports:
            - containerPort: 2020
          env:
            - name: FLUENT_ELASTICSEARCH_HOST
              value: "elasticsearch"
            - name: FLUENT_ELASTICSEARCH_PORT
              value: "9200"
          volumeMounts:
            - name: varlog
              mountPath: /var/log
            - name: varlibdockercontainers
              mountPath: /var/lib/docker/containers
              readOnly: true
            - name: fluent-bit-config
              mountPath: /fluent-bit/etc/
      terminationGracePeriodSeconds: 10
      volumes:
        - name: varlog
          hostPath:
            path: /var/log
        - name: varlibdockercontainers
          hostPath:
            path: /var/lib/docker/containers
        - name: fluent-bit-config
          configMap:
            name: fluent-bit-config
      serviceAccountName: fluent-bit
      tolerations:
        - key: node-role.kubernetes.io/master
          operator: Exists
          effect: NoSchedule
EOF
```

```shell
$ kubectl create -f fluent-bit-ds.yaml
```

Output

```text
daemonset.apps/fluent-bit created
```
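With `Logstash_Format On`, the `es` output writes to daily indices named `logstash-YYYY.MM.DD` (Fluent Bit's defaults are `Logstash_Prefix logstash` and `Logstash_DateFormat %Y.%m.%d`). A POSIX shell sketch to confirm that logs are arriving, assuming the Elasticsearch port-forward on localhost:9200 is still running; the function names are hypothetical, and using the UTC date is an assumption. It calls the `_cat/count` API, which with `h=count` prints only the document count.

```shell
# Hypothetical helpers: check that Fluent Bit is writing into a daily
# logstash-YYYY.MM.DD index (Elasticsearch assumed at http://localhost:9200).

# Index name for a date given as YYYY-MM-DD; defaults to today's UTC date.
logstash_index() {
  day=${1:-$(date -u +%Y-%m-%d)}
  printf 'logstash-%s\n' "$(printf '%s' "$day" | sed 's/-/./g')"
}

# Document count of that index as reported by _cat/count.
# $1: optional date (YYYY-MM-DD), $2: optional Elasticsearch URL.
logstash_doc_count() {
  curl -s "${2:-http://localhost:9200}/_cat/count/$(logstash_index "$1")?h=count" | tr -d '[:space:]'
}
```

Usage: `logstash_doc_count` should print a growing number once the Fluent Bit pods (`kubectl get pods --namespace=kube-logging -l k8s-app=fluent-bit-logging`) are running; the same index pattern, `logstash-*`, is what you would enter when creating an index pattern in Kibana.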