Persistent Volumes

In this session, we will discuss PersistentVolumes and PersistentVolumeClaims.

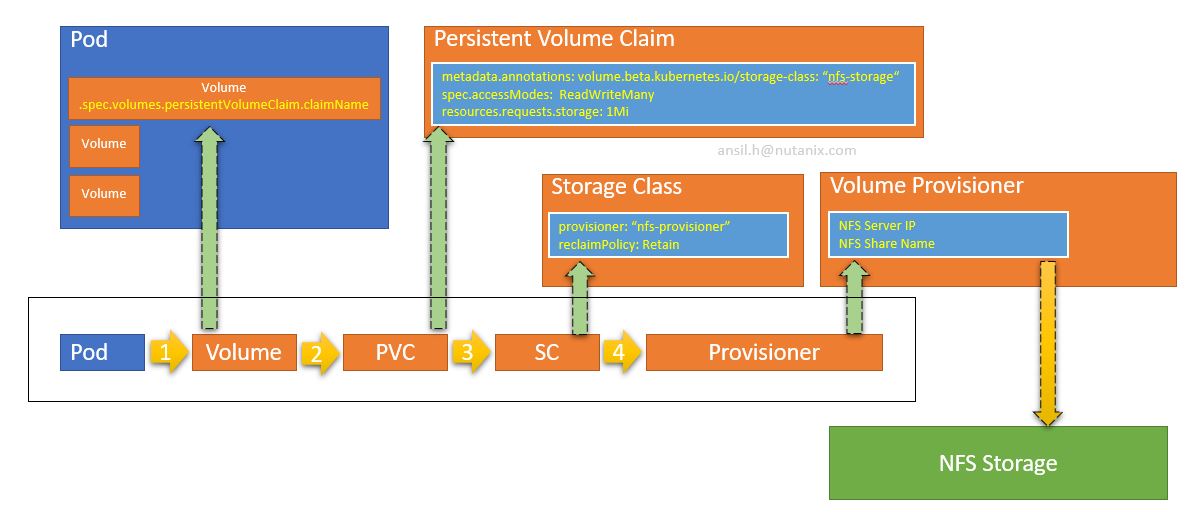

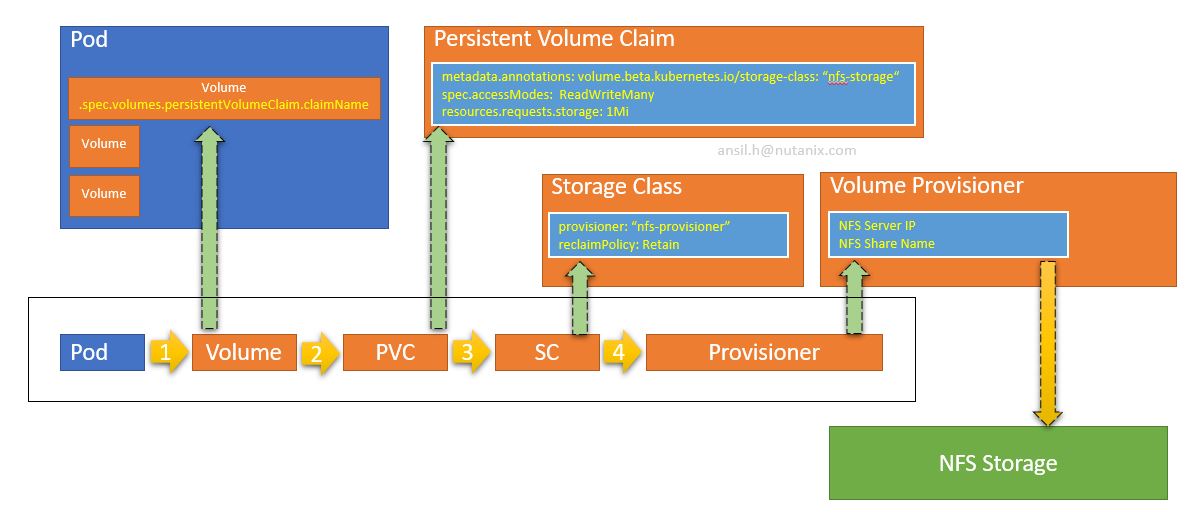

The PersistentVolume subsystem provides an API for users and administrators that abstracts details of how storage is provided from how it is consumed.

To do this, Kubernetes introduces two API resources: PersistentVolume and PersistentVolumeClaim.

A PersistentVolume (PV) is a piece of storage in the cluster that has been provisioned by an administrator.

It is a resource in the cluster just like a node is a cluster resource.

PVs are volume plugins like Volumes, but have a lifecycle independent of any individual pod that uses the PV.

This API object captures the details of the implementation of the storage, be that NFS, iSCSI, or a cloud-provider-specific storage system.
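As an illustration of how a PV records the storage implementation, here is a minimal sketch of an NFS-backed PersistentVolume. The object name, server address, and export path are placeholders chosen for this example, not values from this setup.

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: example-nfs-pv            # hypothetical name
spec:
  capacity:
    storage: 5Gi                  # size advertised to claims
  accessModes:
    - ReadWriteMany               # NFS can be mounted read-write by many nodes
  persistentVolumeReclaimPolicy: Retain   # keep the data after the claim is deleted
  nfs:                            # the implementation detail the PV captures
    server: 192.0.2.10            # placeholder address (documentation range)
    path: /exports/data           # hypothetical export path
```

A Pod never references this object directly; it reaches the storage through a claim that binds to the PV.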

A PersistentVolumeClaim (PVC) is a request for storage by a user.

It is similar to a pod. Pods consume node resources and PVCs consume PV resources.

Pods can request specific levels of resources (CPU and Memory).

Claims can request specific size and access modes (e.g., can be mounted once read/write or many times read-only).
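A claim that asks for a specific size and access mode could look like the following sketch. The claim name and the requested size are assumptions made for illustration.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: example-claim             # hypothetical name
spec:
  accessModes:
    - ReadWriteOnce               # mounted read-write by a single node
  resources:
    requests:
      storage: 1Gi                # binds to a PV of at least this size
```

Kubernetes binds the claim to a PV that satisfies both the size and the access mode. The claim stays Pending until such a volume exists or is dynamically provisioned.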

A StorageClass provides a way for administrators to describe the “classes” of storage they offer.

Different classes might map to quality-of-service levels, or to backup policies, or to arbitrary policies determined by the cluster administrators.

Kubernetes itself is unopinionated about what classes represent. This concept is sometimes called “profiles” in other storage systems.
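To make the idea of classes concrete, the sketch below defines two StorageClasses that differ only in policy. The class names, the provisioner name, and the `tier` parameter are hypothetical. Parameters are passed through to the provisioner, and Kubernetes does not interpret them.

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: example-fast                        # hypothetical class name
provisioner: example.com/hypothetical-provisioner
reclaimPolicy: Delete                       # remove the volume with its claim
parameters:
  tier: ssd                                 # hypothetical, provisioner-specific
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: example-archive                     # hypothetical class name
provisioner: example.com/hypothetical-provisioner
reclaimPolicy: Retain                       # keep the volume after claim deletion
parameters:
  tier: hdd                                 # hypothetical, provisioner-specific
```

A claim selects a class by setting `storageClassName` to one of these names.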

Let's follow the basic example outlined below:

https://kubernetes.io/docs/tasks/configure-pod-container/configure-persistent-volume-storage/
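For readers who cannot open the link, the linked task creates a hostPath PV, a claim, and a Pod that mounts the claim. The manifests below are a sketch reproduced from memory of that task, so names and sizes may differ from the current version of the page.

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: task-pv-volume
  labels:
    type: local
spec:
  storageClassName: manual        # class name used only to match PV and PVC
  capacity:
    storage: 10Gi
  accessModes:
    - ReadWriteOnce
  hostPath:
    path: "/mnt/data"             # directory on the node; single-node testing only
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: task-pv-claim
spec:
  storageClassName: manual
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 3Gi                # smaller than the PV, so the PV can satisfy it
---
apiVersion: v1
kind: Pod
metadata:
  name: task-pv-pod
spec:
  volumes:
    - name: task-pv-storage
      persistentVolumeClaim:
        claimName: task-pv-claim  # the Pod refers to the claim, not the PV
  containers:
    - name: task-pv-container
      image: nginx
      ports:
        - containerPort: 80
          name: "http-server"
      volumeMounts:
        - mountPath: "/usr/share/nginx/html"
          name: task-pv-storage
```

The Pod names only the claim, so the PV behind it can change without touching the Pod spec.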

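The steps below build an iSCSI target server (targetd) that a provisioner in the cluster can use to create volumes on demand.

Create a VM with two disks (50 GB each)

Install CentOS 7 from the minimal ISO with the default options. Select only the first disk for installation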

Disable SELinux

    # grep disabled /etc/sysconfig/selinux

Output

    SELINUX=disabled

Disable firewalld

    systemctl disable firewalld
    systemctl stop firewalld
    setenforce 0

Install targetd

    yum install targetd

Create a volume group for targetd on the second disk

    vgcreate vg-targetd /dev/sdb

Edit the targetd configuration

    vi /etc/target/targetd.yaml

Contents of targetd.yaml

    password: nutanix

    # defaults below; uncomment and edit
    # if using a thin pool, use <volume group name>/<thin pool name>
    # e.g vg-targetd/pool
    pool_name: vg-targetd
    user: admin
    ssl: false
    target_name: iqn.2003-01.org.linux-iscsi.k8straining:targetd

Start and enable targetd

    systemctl start targetd
    systemctl enable targetd
    systemctl status targetd

Output

    ● target.service - Restore LIO kernel target configuration
       Loaded: loaded (/usr/lib/systemd/system/target.service; enabled; vendor preset: disabled)
       Active: active (exited) since Wed 2019-02-06 13:12:58 EST; 10s ago
     Main PID: 15795 (code=exited, status=0/SUCCESS)

    Feb 06 13:12:58 iscsi.k8straining.com systemd[1]: Starting Restore LIO kernel target configuration...
    Feb 06 13:12:58 iscsi.k8straining.com target[15795]: No saved config file at /etc/target/saveconfig.json, ok, exiting
    Feb 06 13:12:58 iscsi.k8straining.com systemd[1]: Started Restore LIO kernel target configuration.

On each worker node

Make sure the IQN is present

    $ sudo vi /etc/iscsi/initiatorname.iscsi
    $ sudo systemctl status iscsid
    $ sudo systemctl restart iscsid

Create a secret holding the targetd credentials

    $ kubectl create secret generic targetd-account --from-literal=username=admin --from-literal=password=nutanix

Download and edit the provisioner deployment

    wget https://raw.githubusercontent.com/ansilh/kubernetes-the-hardway-virtualbox/master/config/iscsi-provisioner-d.yaml
    vi iscsi-provisioner-d.yaml

Modify TARGETD_ADDRESS to the targetd server address.

Download the claim and StorageClass manifests, then edit the StorageClass

    wget https://raw.githubusercontent.com/ansilh/kubernetes-the-hardway-virtualbox/master/config/iscsi-provisioner-pvc.yaml
    wget https://raw.githubusercontent.com/ansilh/kubernetes-the-hardway-virtualbox/master/config/iscsi-provisioner-class.yaml
    vi iscsi-provisioner-class.yaml

Set the following parameters

    targetPortal -> 10.136.102.168
    iqn -> iqn.2003-01.org.linux-iscsi.k8straining:targetd
    initiators -> iqn.1993-08.org.debian:01:k8s-worker-ah-01,iqn.1993-08.org.debian:01:k8s-worker-ah-02,iqn.1993-08.org.debian:01:k8s-worker-ah-03

Create the objects and check that the provisioner is running

    $ kubectl create -f iscsi-provisioner-d.yaml -f iscsi-provisioner-pvc.yaml -f iscsi-provisioner-class.yaml
    $ kubectl get all

Output

    NAME                                     READY   STATUS    RESTARTS   AGE
    pod/iscsi-provisioner-6c977f78d4-gxb2x   1/1     Running   0          33s
    pod/nginx-deployment-76bf4969df-d74ff    1/1     Running   0          147m
    pod/nginx-deployment-76bf4969df-rfgfj    1/1     Running   0          147m
    pod/nginx-deployment-76bf4969df-v4pq5    1/1     Running   0          147m

    NAME                 TYPE        CLUSTER-IP    EXTERNAL-IP   PORT(S)   AGE
    service/kubernetes   ClusterIP   172.168.0.1   <none>        443/TCP   4d3h

    NAME                                READY   UP-TO-DATE   AVAILABLE   AGE
    deployment.apps/iscsi-provisioner   1/1     1            1           33s
    deployment.apps/nginx-deployment    3/3     3            3           3h50m

    NAME                                           DESIRED   CURRENT   READY   AGE
    replicaset.apps/iscsi-provisioner-6c977f78d4   1         1         1       33s
    replicaset.apps/nginx-deployment-76bf4969df    3         3         3       3h50m
    replicaset.apps/nginx-deployment-779fcd779f    0         0         0       155m

Check the claim

    $ kubectl get pvc

Output

    NAME      STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS               AGE
    myclaim   Bound    pvc-2a484a72-2a3e-11e9-aa2d-506b8db54343   100Mi      RWO            iscsi-targetd-vg-targetd   4s

On the targetd server, the new volume appears as a block backstore and a LUN mapped to each initiator

    # targetcli ls
    Warning: Could not load preferences file /root/.targetcli/prefs.bin.

    o- / ......................................................................................................................... [...]
      o- backstores .............................................................................................................. [...]
      | o- block .................................................................................................. [Storage Objects: 1]
      | | o- vg-targetd:pvc-2a484a72-2a3e-11e9-aa2d-506b8db54343 [/dev/vg-targetd/pvc-2a484a72-2a3e-11e9-aa2d-506b8db54343 (100.0MiB) write-thru activated]
      | |   o- alua ................................................................................................... [ALUA Groups: 1]
      | |     o- default_tg_pt_gp ....................................................................... [ALUA state: Active/optimized]
      | o- fileio ................................................................................................. [Storage Objects: 0]
      | o- pscsi .................................................................................................. [Storage Objects: 0]
      | o- ramdisk ................................................................................................ [Storage Objects: 0]
      o- iscsi ............................................................................................................ [Targets: 1]
      | o- iqn.2003-01.org.linux-iscsi.k8straining:targetd ................................................................... [TPGs: 1]
      |   o- tpg1 ............................................................................................... [no-gen-acls, no-auth]
      |     o- acls .......................................................................................................... [ACLs: 3]
      |     | o- iqn.1993-08.org.debian:01:k8s-worker-ah-01 ........................................................... [Mapped LUNs: 1]
      |     | | o- mapped_lun0 ................................... [lun0 block/vg-targetd:pvc-2a484a72-2a3e-11e9-aa2d-506b8db54343 (rw)]
      |     | o- iqn.1993-08.org.debian:01:k8s-worker-ah-02 ........................................................... [Mapped LUNs: 1]
      |     | | o- mapped_lun0 ................................... [lun0 block/vg-targetd:pvc-2a484a72-2a3e-11e9-aa2d-506b8db54343 (rw)]
      |     | o- iqn.1993-08.org.debian:01:k8s-worker-ah-03 ........................................................... [Mapped LUNs: 1]
      |     |   o- mapped_lun0 ................................... [lun0 block/vg-targetd:pvc-2a484a72-2a3e-11e9-aa2d-506b8db54343 (rw)]
      |     o- luns .......................................................................................................... [LUNs: 1]
      |     | o- lun0 [block/vg-targetd:pvc-2a484a72-2a3e-11e9-aa2d-506b8db54343 (/dev/vg-targetd/pvc-2a484a72-2a3e-11e9-aa2d-506b8db54343) (default_tg_pt_gp)]
      |     o- portals .................................................................................................... [Portals: 1]
      |       o- 0.0.0.0:3260 ..................................................................................................... [OK]
      o- loopback ......................................................................................................... [Targets: 0]

The logical volume backing the claim is visible in the volume group

    lvs

Output

    LV                                       VG         Attr       LSize   Pool Origin Data%  Meta%  Move Log Cpy%Sync Convert
    home                                     centos     -wi-ao---- <41.12g
    root                                     centos     -wi-ao----  50.00g
    swap                                     centos     -wi-ao----  <7.88g
    pvc-2a484a72-2a3e-11e9-aa2d-506b8db54343 vg-targetd -wi-ao---- 100.00m

More details can be found at the URLs below:

https://github.com/kubernetes-incubator/external-storage/tree/master/iscsi/targetd

https://github.com/kubernetes-incubator/external-storage/tree/master/iscsi/targetd/kubernetes
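For orientation, the StorageClass and claim used in this walkthrough might look roughly like the sketch below. The class name, claim name, size, access mode, target portal, IQN, and initiator list come from the steps and output above. The provisioner name, the `volumeGroup` and `iscsiInterface` parameters, and the `:3260` port suffix are assumptions based on the upstream targetd provisioner examples and should be checked against the downloaded files.

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: iscsi-targetd-vg-targetd          # matches STORAGECLASS in the pvc output
provisioner: iscsi-targetd                # assumption: upstream default provisioner name
parameters:
  targetPortal: 10.136.102.168:3260       # port is an assumption (default iSCSI portal port)
  iqn: iqn.2003-01.org.linux-iscsi.k8straining:targetd
  iscsiInterface: default                 # assumption
  volumeGroup: vg-targetd                 # assumption: the VG created with vgcreate
  initiators: iqn.1993-08.org.debian:01:k8s-worker-ah-01,iqn.1993-08.org.debian:01:k8s-worker-ah-02,iqn.1993-08.org.debian:01:k8s-worker-ah-03
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: myclaim                           # matches NAME in the pvc output
spec:
  storageClassName: iscsi-targetd-vg-targetd
  accessModes:
    - ReadWriteOnce                       # shown as RWO in the pvc output
  resources:
    requests:
      storage: 100Mi                      # shown as CAPACITY in the pvc output
```

When the claim is created, the provisioner asks targetd to carve a logical volume out of the volume group and export it as a LUN to the listed initiators. This is the state shown in the targetcli and lvs output.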

The NFS setup below assumes that the firewall and SELinux are disabled on the NFS server.

Install the NFS server packages and create the export directory

    $ sudo yum install nfs-utils
    $ sudo mkdir /share
    $ sudo vi /etc/exports

Contents of /etc/exports

    /share *(rw,sync,no_root_squash,no_all_squash)

Restart the NFS server

    $ sudo systemctl restart nfs-server

In the manifests below, replace <NFS_SERVER_IP> with the IP address of the NFS server.

Provisioner ServiceAccount and Deployment

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: nfs-client-provisioner
    ---
    kind: Deployment
    apiVersion: apps/v1
    metadata:
      name: nfs-client-provisioner
    spec:
      replicas: 1
      strategy:
        type: Recreate
      selector:                  # required by apps/v1
        matchLabels:
          app: nfs-client-provisioner
      template:
        metadata:
          labels:
            app: nfs-client-provisioner
        spec:
          serviceAccountName: nfs-client-provisioner
          containers:
            - name: nfs-client-provisioner
              image: quay.io/external_storage/nfs-client-provisioner:latest
              volumeMounts:
                - name: nfs-client-root
                  mountPath: /persistentvolumes
              env:
                - name: PROVISIONER_NAME
                  value: fuseim.pri/ifs
                - name: NFS_SERVER
                  value: <NFS_SERVER_IP>   # replace with the NFS server IP
                - name: NFS_PATH
                  value: /share
          volumes:
            - name: nfs-client-root
              nfs:
                server: <NFS_SERVER_IP>    # replace with the NFS server IP
                path: /share               # must match NFS_PATH and the exported directory

StorageClass

    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: managed-nfs-storage
    provisioner: fuseim.pri/ifs # or choose another name, must match the deployment's env PROVISIONER_NAME
    parameters:
      archiveOnDelete: "false"

RBAC

    kind: ServiceAccount
    apiVersion: v1
    metadata:
      name: nfs-client-provisioner
    ---
    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: nfs-client-provisioner-runner
    rules:
      - apiGroups: [""]
        resources: ["persistentvolumes"]
        verbs: ["get", "list", "watch", "create", "delete"]
      - apiGroups: [""]
        resources: ["persistentvolumeclaims"]
        verbs: ["get", "list", "watch", "update"]
      - apiGroups: ["storage.k8s.io"]
        resources: ["storageclasses"]
        verbs: ["get", "list", "watch"]
      - apiGroups: [""]
        resources: ["events"]
        verbs: ["create", "update", "patch"]
    ---
    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: run-nfs-client-provisioner
    subjects:
      - kind: ServiceAccount
        name: nfs-client-provisioner
        namespace: default
    roleRef:
      kind: ClusterRole
      name: nfs-client-provisioner-runner
      apiGroup: rbac.authorization.k8s.io
    ---
    kind: Role
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: leader-locking-nfs-client-provisioner
    rules:
      - apiGroups: [""]
        resources: ["endpoints"]
        verbs: ["get", "list", "watch", "create", "update", "patch"]
    ---
    kind: RoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: leader-locking-nfs-client-provisioner
    subjects:
      - kind: ServiceAccount
        name: nfs-client-provisioner
        # replace with namespace where provisioner is deployed
        namespace: default
    roleRef:
      kind: Role
      name: leader-locking-nfs-client-provisioner
      apiGroup: rbac.authorization.k8s.io

Test claim

    kind: PersistentVolumeClaim
    apiVersion: v1
    metadata:
      name: test-claim
      annotations:
        volume.beta.kubernetes.io/storage-class: "managed-nfs-storage"
    spec:
      accessModes:
        - ReadWriteMany
      resources:
        requests:
          storage: 1Mi

Test Pod

    kind: Pod
    apiVersion: v1
    metadata:
      name: test-pod
    spec:
      containers:
        - name: test-pod
          image: gcr.io/google_containers/busybox:1.24
          command:
            - "/bin/sh"
          args:
            - "-c"
            - "touch /mnt/SUCCESS && sleep 1000 || exit 1"
          volumeMounts:
            - name: nfs-pvc
              mountPath: "/mnt"
      restartPolicy: "Never"
      volumes:
        - name: nfs-pvc
          persistentVolumeClaim:
            claimName: test-claim

The Pod creates the file /mnt/SUCCESS on the NFS volume and then sleeps for 1000 seconds. You can log in to the Pod and verify the volume mount.