Subsections of Services

Expose services in Pod

Service

A Coffee Pod is running in the cluster and listening on port 9090 on the Pod’s IP. How can we expose that service to the external world so that users can access it?

We need to expose the service.

As we know, the Pod IP is not routable outside the cluster. So we need a mechanism to reach a port on the host (node) and have that traffic forwarded to the Pod’s port.

Let’s create a Pod YAML first.

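$ vi coffe.yaml

apiVersion: v1
kind: Pod
metadata:
  name: coffee
  labels:
    run: coffee   # kubectl expose derives the Service selector from the Pod's labels
spec:
  containers:
  - image: ansilh/demo-coffee
    name: coffee

Create the Pod from the YAML file.

$ kubectl create -f coffe.yaml

Expose the Pod with the command below.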

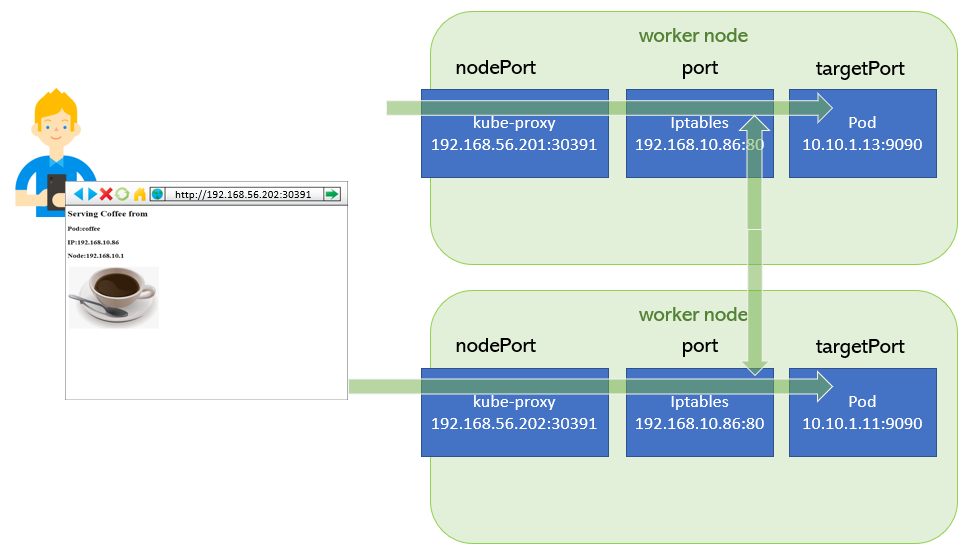

$ kubectl expose pod coffee --port=80 --target-port=9090 --type=NodePort

This creates a Service object in Kubernetes, which maps the node’s port 30391 to the Service IP and port 192.168.10.86:80. (The node port is allocated by Kubernetes; 30391 is the value from this run.)

We can see the details using the kubectl get service command.

$ kubectl get service
NAME         TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)        AGE
coffee       NodePort    192.168.10.86   <none>        80:30391/TCP   6s
kubernetes   ClusterIP   192.168.10.1    <none>        443/TCP        26h

We can also see that the port is open on the node and that kube-proxy is the process listening on it.

$ sudo netstat -tnlup |grep 30836

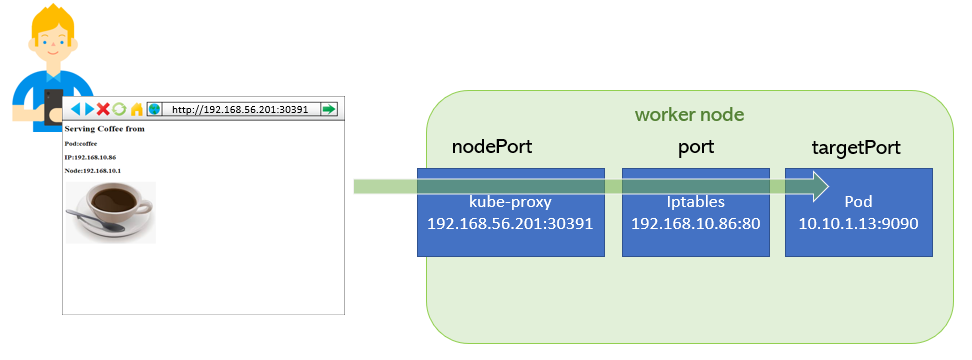

tcp6 0 0 :::30391 :::* LISTEN 2785/kube-proxyNow you can open browser and access the Coffee app using URL http://192.168.56.201:30391

Ports in Service Objects

nodePort

This setting makes the service visible outside the Kubernetes cluster by the node’s IP address and the port number declared in this property. The service also has to be of type NodePort (if this field isn’t specified, Kubernetes will allocate a node port automatically).

port

Expose the service on the specified port internally within the cluster. That is, the service becomes visible on this port, and will send requests made to this port to the pods selected by the service.

targetPort

This is the port on the pod that the request gets sent to. Your application needs to be listening for network requests on this port for the service to work.

NodePort

NodePort exposes the service on each Node’s IP at a static port (the NodePort). A ClusterIP service, to which the NodePort service will route, is automatically created. You’ll be able to contact the NodePort service, from outside the cluster, by requesting <NodeIP>:<NodePort>.

How nodePort works

kube-proxy watches the Kubernetes master for the addition and removal of Service and Endpoints objects.

(We will discuss Endpoints later in this session.)

For each Service, kube-proxy (in its userspace proxy mode, which is what this description covers) opens a port (randomly chosen) on the local node. Any connection to this “proxy port” is proxied to one of the Service’s backend Pods (as reported in Endpoints). Lastly, it installs iptables rules that capture traffic to the Service’s clusterIP (which is virtual) and port, and redirect that traffic to the proxy port, which in turn proxies to the backend Pod.

nodePort workflow:

nodePort   -> 30391
port       -> 80
targetPort -> 9090
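To make the three port fields concrete, here is a minimal Python sketch that traces a request through the values used in this walkthrough. It is only a logical model of the mapping, not the literal packet path and not part of any Kubernetes client; the names `ServicePorts` and `trace` are hypothetical, and the addresses are the ones that appear in this session's outputs.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class ServicePorts:
    """Hypothetical model of the three port fields of a NodePort Service."""
    node_port: int    # opened on every node, reachable from outside the cluster
    port: int         # exposed on the Service's cluster IP, inside the cluster
    target_port: int  # the port the container in the Pod listens on


def trace(node_ip: str, cluster_ip: str, pod_ip: str, ports: ServicePorts) -> List[str]:
    """Return the logical hops an external request maps through, in order."""
    return [
        f"{node_ip}:{ports.node_port}",    # client -> node (nodePort)
        f"{cluster_ip}:{ports.port}",      # node -> Service (port)
        f"{pod_ip}:{ports.target_port}",   # Service -> Pod (targetPort)
    ]


coffee = ServicePorts(node_port=30391, port=80, target_port=9090)
hops = trace("192.168.56.201", "192.168.10.86", "10.10.1.13", coffee)
print(" -> ".join(hops))
# prints: 192.168.56.201:30391 -> 192.168.10.86:80 -> 10.10.1.13:9090
```

Only the first hop is visible to a user outside the cluster; the Service port and the target port stay internal.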

ClusterIP

It exposes the service on a cluster-internal IP.

When we expose a Pod using the kubectl expose command, we are creating a Service object through the API.

Choosing this value makes the service only reachable from within the cluster. This is the default ServiceType.

We can see the Service spec using --dry-run and --output=yaml (kubectl 1.18 and later deprecate the bare --dry-run flag in favor of --dry-run=client).

$ kubectl expose pod coffee --port=80 --target-port=9090 --type=ClusterIP --dry-run --output=yaml

Output

apiVersion: v1
kind: Service
metadata:
  creationTimestamp: null
  labels:
    run: coffee
  name: coffee
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 9090
  selector:
    run: coffee
  type: ClusterIP
status:
  loadBalancer: {}

A ClusterIP service is useful when you don’t want to expose the service to the external world, for example a database service.

With service names, a frontend tier can access the database backend without knowing the IPs of the Pods.

CoreDNS (kube-dns) will dynamically create a service DNS entry and that will be resolvable from Pods.
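The shape of that DNS entry can be sketched in a few lines of Python. This is an illustration only, assuming the default cluster domain `cluster.local` and the `default` namespace; the helper names `service_fqdn` and `split_service_fqdn` are hypothetical and do not come from any Kubernetes library.

```python
from typing import Dict


def service_fqdn(service: str, namespace: str = "default",
                 cluster_domain: str = "cluster.local") -> str:
    """Build a Service DNS name: <service>.<namespace>.svc.<cluster-domain>."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


def split_service_fqdn(fqdn: str) -> Dict[str, str]:
    """Split a Service DNS name back into its parts (a trailing dot is ignored)."""
    service, namespace, kind, cluster_domain = fqdn.rstrip(".").split(".", 3)
    if kind != "svc":
        raise ValueError(f"not a Service DNS name: {fqdn}")
    return {"service": service, "namespace": namespace, "cluster_domain": cluster_domain}


name = service_fqdn("coffee")
print(name)                      # prints: coffee.default.svc.cluster.local
print(split_service_fqdn(name))
```

A Pod in the same namespace can usually use just the short name (`coffee`), because the resolver's search path supplies the rest.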

Verify Service DNS

Start the debug-tools container, which is an Alpine Linux image with network-related binaries.

$ kubectl run debugger --image=ansilh/debug-tools --restart=Never
$ kubectl exec -it debugger -- /bin/sh
/ # nslookup coffee
Server:    192.168.10.10
Address:   192.168.10.10#53

Name:      coffee.default.svc.cluster.local
Address:   192.168.10.86

/ # nslookup 192.168.10.86
86.10.168.192.in-addr.arpa name = coffee.default.svc.cluster.local.

/ #

coffee.default.svc.cluster.local
  ^       ^     ^   k8s domain
  |       |     |  |-----------|
  |       |     +--------------- Indicates that it's a service
  |       +--------------------- Namespace
  +----------------------------- Service name

LoadBalancer

Exposes the service externally using a cloud provider’s load balancer. NodePort and ClusterIP services, to which the external load balancer will route, are automatically created.

We will discuss more about this topic later in this training.

Endpoints

Pods behind a service.

Let’s describe the service to see how the mapping of Pods works in a Service object.

(Yes, we are slowly moving from general wording to pure Kubernetes terms.)

$ kubectl describe service coffee
Name:                     coffee
Namespace:                default
Labels:                   run=coffee
Annotations:              <none>
Selector:                 run=coffee
Type:                     NodePort
IP:                       192.168.10.86
Port:                     <unset>  80/TCP
TargetPort:               9090/TCP
NodePort:                 <unset>  30391/TCP
Endpoints:                10.10.1.13:9090
Session Affinity:         None
External Traffic Policy:  Cluster

Here the label run=coffee is the one that creates the mapping from the Service to the Pod.

Any pod with label run=coffee will be mapped under this service.

Those mappings are called Endpoints.
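The selector-to-endpoint mapping can be pictured with a small Python sketch. It is a simplified model under stated assumptions, not how the Kubernetes endpoints controller is implemented: it ignores Pod readiness, and the function names `matches` and `select_endpoints` as well as the second Pod (`tea`) are hypothetical, added only to show a non-matching label.

```python
from typing import Dict, List


def matches(selector: Dict[str, str], labels: Dict[str, str]) -> bool:
    """True when every key/value pair in the selector is present in the Pod's labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def select_endpoints(selector: Dict[str, str], pods: List[dict], target_port: int) -> List[str]:
    """Return 'ip:port' for every Pod whose labels satisfy the selector."""
    return [f"{pod['ip']}:{target_port}" for pod in pods if matches(selector, pod["labels"])]


pods = [
    {"name": "coffee", "ip": "10.10.1.13", "labels": {"run": "coffee"}},
    # Hypothetical Pod with a different label; the selector skips it.
    {"name": "tea", "ip": "10.10.1.14", "labels": {"run": "tea"}},
]

print(select_endpoints({"run": "coffee"}, pods, 9090))
# prints: ['10.10.1.13:9090']
```

Adding another Pod with the label run=coffee to the list would add its address to the result, which is the behaviour the rest of this section demonstrates with kubectl.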

Lets see the endpoints of service coffee

$ kubectl get endpoints coffee

NAME ENDPOINTS AGE

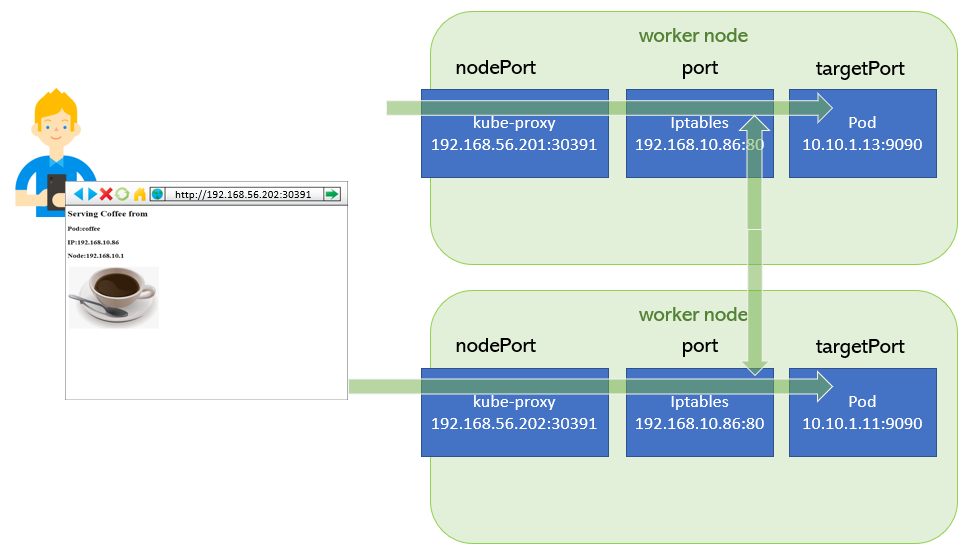

coffee 10.10.1.13:9090 3h48mAs of now only one pod endpoint is mapped under this service.

Let’s create one more Pod with the same label and see how it affects the endpoints.

$ kubectl run coffee01 --image=ansilh/demo-coffee --restart=Never --labels=run=coffee

Now we have one more Pod.

$ kubectl get pods
NAME       READY   STATUS    RESTARTS   AGE
coffee     1/1     Running   0          15h
coffee01   1/1     Running   0          6s

Let’s check the endpoints.

$ kubectl get endpoints coffee
NAME     ENDPOINTS                         AGE
coffee   10.10.1.13:9090,10.10.1.19:9090   3h51m

Now we have two Pod endpoints mapped to this service, so requests that come to the coffee service will be served by these Pods in a round-robin fashion (this is the default in kube-proxy’s userspace mode; in iptables mode a backend is picked at random).
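Round-robin selection over the two endpoints can be pictured with a short Python sketch. This is a toy model of the idea, not kube-proxy's implementation; the variable names are illustrative, and the endpoint addresses are the ones listed in the output above.

```python
from itertools import cycle

endpoints = ["10.10.1.13:9090", "10.10.1.19:9090"]

# cycle() yields the endpoints in order and starts over when the list is exhausted.
next_backend = cycle(endpoints)

# Simulate four incoming requests and record which endpoint serves each one.
served_by = [next(next_backend) for _ in range(4)]
print(served_by)
# prints: ['10.10.1.13:9090', '10.10.1.19:9090', '10.10.1.13:9090', '10.10.1.19:9090']
```

Each Pod receives every second request, so the load is split evenly between the two endpoints.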